DevOps doesn’t end with your release process. When the changes you’ve made reach production, they enter the operate stage of the Salesforce DevOps lifecycle. At this stage, the focus shifts to keeping the org stable, preventing disruption for end users, preserving data integrity, and planning for recovery if needed.

While Salesforce manages the underlying infrastructure — including servers, availability, and core platform security — you’re responsible for your org’s data and metadata, including all configuration and permissions. If something goes wrong or you get audited, the Salesforce development team will need to be part of the response, not just IT or security.

In this post, we’ll explore why strategies for backup and archiving are essential to operational success in the Salesforce DevOps lifecycle, and how to build them into your processes to stay resilient, compliant, and ready to recover.


What happens in the operate stage?

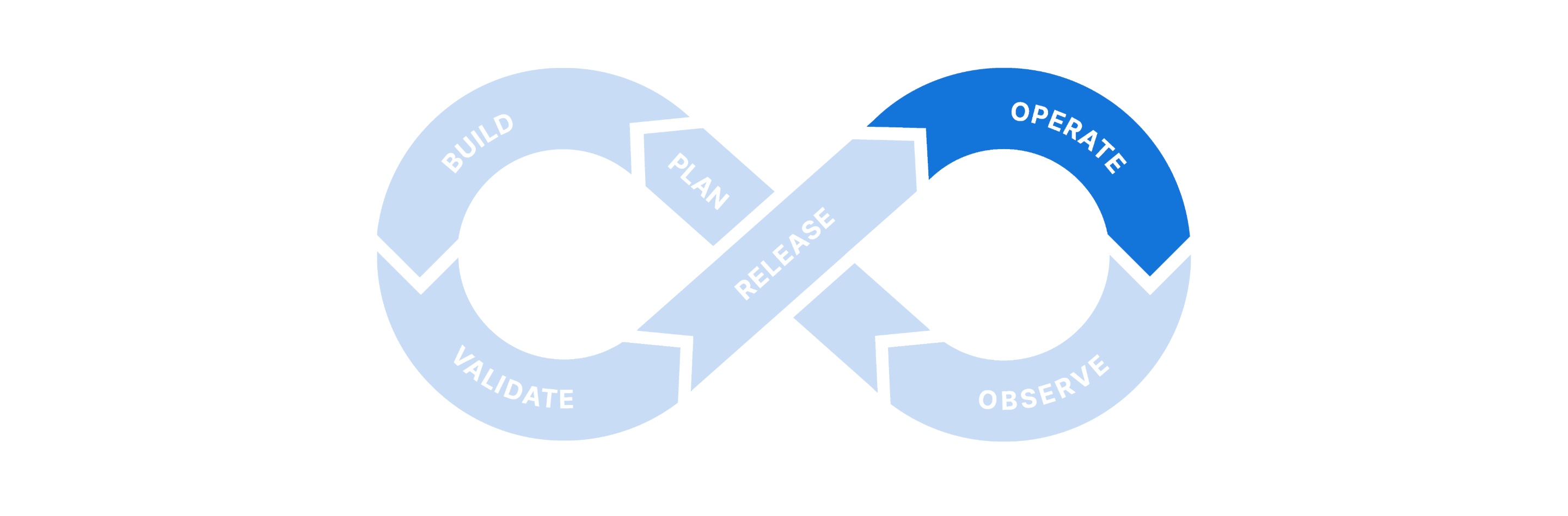

The Salesforce DevOps lifecycle adapts the software development lifecycle to Salesforce, covering planning, building, validating, releasing, operating, and observing. It’s a continuous process that helps teams deliver faster and more safely at scale.

In the operate stage, your Salesforce org is live and in use. End users are working with the features you’ve delivered, while the development team’s role is to ensure the right processes are in place to maintain stability.

For Salesforce teams, that means managing user access, running backup jobs, and responding fast when something breaks — whether it’s a failed deployment, a permissions issue, or unexpected data loss.

The operate stage is also where compliance matters most. This is where you need processes and audit trails in place to demonstrate compliance in response to any audits or access requests. It’s also important to maintain high data quality and smooth org performance. Archiving plays a key role here: removing obsolete data from the live environment keeps records accurate and systems running efficiently, which matters all the more for Agentforce agents that rely on up-to-date records.

Because the DevOps lifecycle is continuous, visualized as an infinity loop, the operate stage isn’t just an end goal. It’s closely tied to the observe stage, where you use observability tools to understand how well your org is running, detect risks early, surface trends, and plan improvements. Together, the operate and observe stages give your team the real-time control to stay stable and the long-term visibility to evolve.

Why Salesforce backup and archiving are key to staying operational

Operational success in Salesforce depends on your ability to protect data, maintain performance, and recover quickly when things go wrong. At the heart of this are two foundational functions for the operate stage: backup and archiving.

Backing up both data and metadata is vital to being able to restore data after disruptions. With reliable backups, a tested restore process, and a clear strategy in place, the best teams can reduce mean time to recover (MTTR) and build confidence in their ability to respond to issues without major disruption.

Archiving obsolete data improves org performance and helps manage storage costs, while maintaining long-term access for compliance standards and reporting.

Operational excellence means being ready to respond, recover, and improve every time something goes wrong. When backup and archiving are embedded into your daily operations, they reduce risk and create the stability and visibility your org needs.

Who’s responsible for operational success on Salesforce?

Staying operational on Salesforce is a team effort. No single role shoulders total responsibility for how things go in production. For example, admins might configure backup jobs and make sure the right data is protected, while developers might support recovery to ensure data and metadata are restored quickly from backups. Release managers reduce risk by triggering on-demand backups ahead of deployments, and compliance officers ensure retention policies meet regulatory standards.

Because metadata determines the shape and behavior of your Salesforce org, you need your Salesforce team to stay actively involved in the operate stage. They understand how configuration, automation, and permissions interact — and that knowledge is essential when restoring metadata or resolving issues in production.

Learning from real-world Salesforce operational failures

So what happens if you’re operating on Salesforce without backup and archiving in place? You’re leaving a tooling gap in the DevOps lifecycle, one that puts both your development work and your data at risk.

You can’t avoid failures completely, and in the Salesforce ecosystem, things do go wrong. In November 2024, a database maintenance change impacted orgs hosted in some North American and Asia Pacific data centers, leaving some users temporarily unable to log in to Salesforce or access its services. In an earlier incident in 2016, a database failure on the NA14 instance was resolved by restoring from a backup, which meant users lost four hours of data.

In May 2019, an accidental permissions change led to “Permageddon,” an incident that briefly exposed sensitive org data and locked users out of critical functions. Salesforce deleted all affected permissions, which protected sensitive data but left some admins needing to rebuild their org’s profiles and permissions manually. In September 2023, another internal permissions change blocked customers across multiple clouds from accessing their services for several hours during peak business hours.

These incidents show that while you can’t prevent every disruption, strong backups and tested restore processes are essential for minimizing the impact of data disasters or human error — and for recovering quickly and confidently.

To run resilient orgs, high-performing Salesforce teams invest in backups they can trust, and tools that make restoration fast, reliable, and audit-ready. When it comes to protecting your most critical business data, it pays to be prepared.

What mature Salesforce operations look like in practice

Here are the key areas every Salesforce team should care about if they want to build operational excellence into their DevOps process.

Make sure backups and archives are compliant

Operational maturity means staying audit-ready at all times, and that only works when compliance is built in from the start, not added on as an afterthought. If your data protection practices need to meet GDPR requirements, that means defining clear processes for selective data purging versus retention, automating anonymization where appropriate, and ensuring your archiving policies align with legal obligations.
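To make the anonymization idea concrete, here is a minimal Python sketch that redacts personal fields from a record while keeping the non-personal fields that reporting depends on. The field names in `PERSONAL_FIELDS` and the `[REDACTED]` placeholder are assumptions for illustration; your own data classification should decide which fields count as personal data. Redacting the live record isn’t enough on its own, either: copies of the original values held in backups and archives also need to be purged or anonymized.

```python
from typing import Any

# Hypothetical set of fields treated as personal data. In practice this would
# come from your org's data classification, not a hard-coded list.
PERSONAL_FIELDS = frozenset({"FirstName", "LastName", "Email", "Phone"})

REDACTED = "[REDACTED]"


def anonymize_record(
    record: dict[str, Any],
    personal_fields: frozenset[str] = PERSONAL_FIELDS,
) -> dict[str, Any]:
    """Return a copy of the record with populated personal fields replaced.

    Non-personal fields (amounts, dates, stages) are kept so the record can
    still feed aggregate reports. Empty fields are left as they are, and the
    input record is not modified.
    """
    return {
        field: REDACTED if field in personal_fields and value is not None else value
        for field, value in record.items()
    }
```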

For industry-specific compliance frameworks such as HIPAA, you’ll need to secure sensitive data through encryption, maintain detailed audit logs for access and deletion events, and follow a clearly defined standard operating procedure (SOP) for retaining required records for at least six years. And if your organization is subject to SOX, you’ll need immutable backups and archives, along with quarterly restore drills that demonstrate you can recover critical data on demand when auditors need proof.
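Retention periods like HIPAA’s six years are easier to enforce consistently when they’re expressed as data rather than tribal knowledge. The Python sketch below computes when a record’s retention window ends and whether it may be purged. The `RETENTION_YEARS` mapping and its three-year default are hypothetical placeholders; the real values should come from your compliance team. The sketch also ignores legal holds, which would need to block purging regardless of age.

```python
from datetime import date

# Hypothetical retention rules, in years. Only the six-year HIPAA figure comes
# from the text; the default is a placeholder for illustration.
RETENTION_YEARS = {
    "hipaa": 6,
    "default": 3,
}


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping 29 February to 28 February if needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        # 29 February landing in a non-leap year.
        return d.replace(year=d.year + years, day=28)


def retention_expiry(created: date, framework: str) -> date:
    """Date on which the retention window for a record ends."""
    years = RETENTION_YEARS.get(framework, RETENTION_YEARS["default"])
    return add_years(created, years)


def may_purge(created: date, framework: str, today: date) -> bool:
    """True once the retention window has fully elapsed (legal holds not modeled)."""
    return today >= retention_expiry(created, framework)
```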

Don’t forget metadata backup

Restoring objects and records won’t help if your automation, layouts, or permissions are missing. And even more fundamentally, you can’t restore records to an object if the object itself has been lost or corrupted. Metadata determines how your Salesforce org works, so make sure it’s protected alongside your data.
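One reason metadata matters so much during a restore is ordering: parent objects, and their records, have to exist before child records that look them up can be inserted. This Python sketch uses the standard library’s `graphlib.TopologicalSorter` to derive a restore order from a hypothetical map of object dependencies. Real orgs also contain self-lookups and circular references (for example, `Account.ParentId` pointing at another Account), which would make `TopologicalSorter` raise `graphlib.CycleError`; one common way to handle those is a second pass that fills in lookup fields after the records exist.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each object lists the objects its records
# reference through lookups. Parents must be restored before children.
DEPENDENCIES: dict[str, set[str]] = {
    "Account": set(),
    "Contact": {"Account"},
    "Opportunity": {"Account"},
    "OpportunityContactRole": {"Opportunity", "Contact"},
}


def restore_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every object follows the objects it depends on.

    Raises graphlib.CycleError if the map contains a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())
```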

Ensure consistent backups and archiving with automation

Manual backup processes are hard to keep up with, don’t scale, and leave too much room for error. Automating your backup and archive runs ensures consistent coverage, reduces overhead, and limits the impact of human error, without depending on someone remembering to run them.
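Here’s a minimal Python sketch of the kind of wrapper a scheduler could run around each automated backup job: it retries transient failures with exponential backoff and raises an alert only when every attempt has failed, so a broken job doesn’t pass silently. The `job` and `alert` callables are hypothetical stand-ins for whatever actually triggers your backup and notifies your team; `print` is the default alert purely for illustration.

```python
import time
from typing import Callable


def run_with_retries(
    job: Callable[[], None],
    attempts: int = 3,
    base_delay_s: float = 1.0,
    alert: Callable[[str], None] = print,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run a backup job, retrying with exponential backoff.

    Waits base_delay_s, then 2x, 4x, ... between attempts. Returns True on
    success; after the final failed attempt it calls `alert` and returns False.
    """
    for attempt in range(1, attempts + 1):
        try:
            job()
            return True
        except Exception as exc:  # a real job would catch narrower error types
            if attempt == attempts:
                alert(f"Backup failed after {attempts} attempts: {exc}")
                return False
            sleep(base_delay_s * 2 ** (attempt - 1))
    return False
```

Injecting `sleep` keeps the function easy to test without real delays.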

Test your disaster recovery plan regularly

If you’ve never tested a restore, you don’t really have a meaningful backup process. Set up regular restore tests — typically to a sandbox, not production — to validate your backup strategy, improve MTTR, and give your team confidence to recover fast under pressure.
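A restore test is only useful if you check the result. This Python sketch compares records from a backup with the records restored into a sandbox and reports what’s missing, unexpected, or changed. It assumes each record carries a stable key, here a hypothetical `External_Id__c` field, because Salesforce assigns new record IDs when records are inserted into a different org; for the same reason, system fields such as `Id` and `LastModifiedDate` are excluded from the comparison.

```python
import hashlib
import json
from typing import Any

# Fields that legitimately differ between a backup and a restored copy.
SYSTEM_FIELDS = frozenset({"Id", "CreatedDate", "LastModifiedDate", "SystemModstamp"})


def fingerprint(record: dict[str, Any], ignore: frozenset[str] = SYSTEM_FIELDS) -> str:
    """SHA-256 of a record's comparable fields, independent of key order."""
    comparable = {k: v for k, v in record.items() if k not in ignore}
    canonical = json.dumps(comparable, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_restore(
    backup: list[dict[str, Any]],
    restored: list[dict[str, Any]],
    key: str = "External_Id__c",  # hypothetical stable key field
) -> dict[str, list[str]]:
    """Report keys that are missing, unexpected, or changed after a restore."""
    expected = {r[key]: fingerprint(r) for r in backup}
    actual = {r[key]: fingerprint(r) for r in restored}
    return {
        "missing": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
        "changed": sorted(
            k for k in expected.keys() & actual.keys() if expected[k] != actual[k]
        ),
    }
```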

Improve data quality by archiving legacy data

Archiving is an opportunity to improve the quality and relevance of your live data. Removing outdated or redundant records from the active environment helps ensure that reports, dashboards, and AI-driven tools like Agentforce are working with the most accurate, up-to-date information. Cleaner data means faster queries, more reliable insights, and better outcomes for agents and end users.
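As a rough illustration of how archive criteria can be made explicit and repeatable, the Python sketch below selects closed cases that haven’t been modified for about two years. The `CaseRecord` shape, the `Closed` status, and the 730-day threshold are all assumptions; your own criteria will depend on the object, your reporting needs, and your retention policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class CaseRecord:
    """Hypothetical, simplified view of a record considered for archiving."""
    id: str
    status: str
    last_modified: date


def archive_candidates(
    records: list[CaseRecord],
    today: date,
    min_age_days: int = 730,  # roughly two years; hypothetical threshold
    closed_statuses: frozenset[str] = frozenset({"Closed"}),
) -> list[CaseRecord]:
    """Return closed records that haven't been modified since before the cutoff."""
    cutoff = today - timedelta(days=min_age_days)
    return [
        r for r in records
        if r.status in closed_statuses and r.last_modified < cutoff
    ]
```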

Maintain documentation on data management

Document your backup and retention SOPs to build a clear, accessible guide for your backup, archive, and recovery practices. This should cover what gets backed up, how long data is retained, who has access, how to run a restore, and which service level indicators (SLIs) you track to measure success, such as backup success rate or MTTR. Incorporate your disaster recovery and incident response plans here, so the team has a single source of truth when urgent action is needed. A well-documented data archiving strategy ensures your team can manage storage and compliance with confidence.

Measure your operational performance with monitoring tools

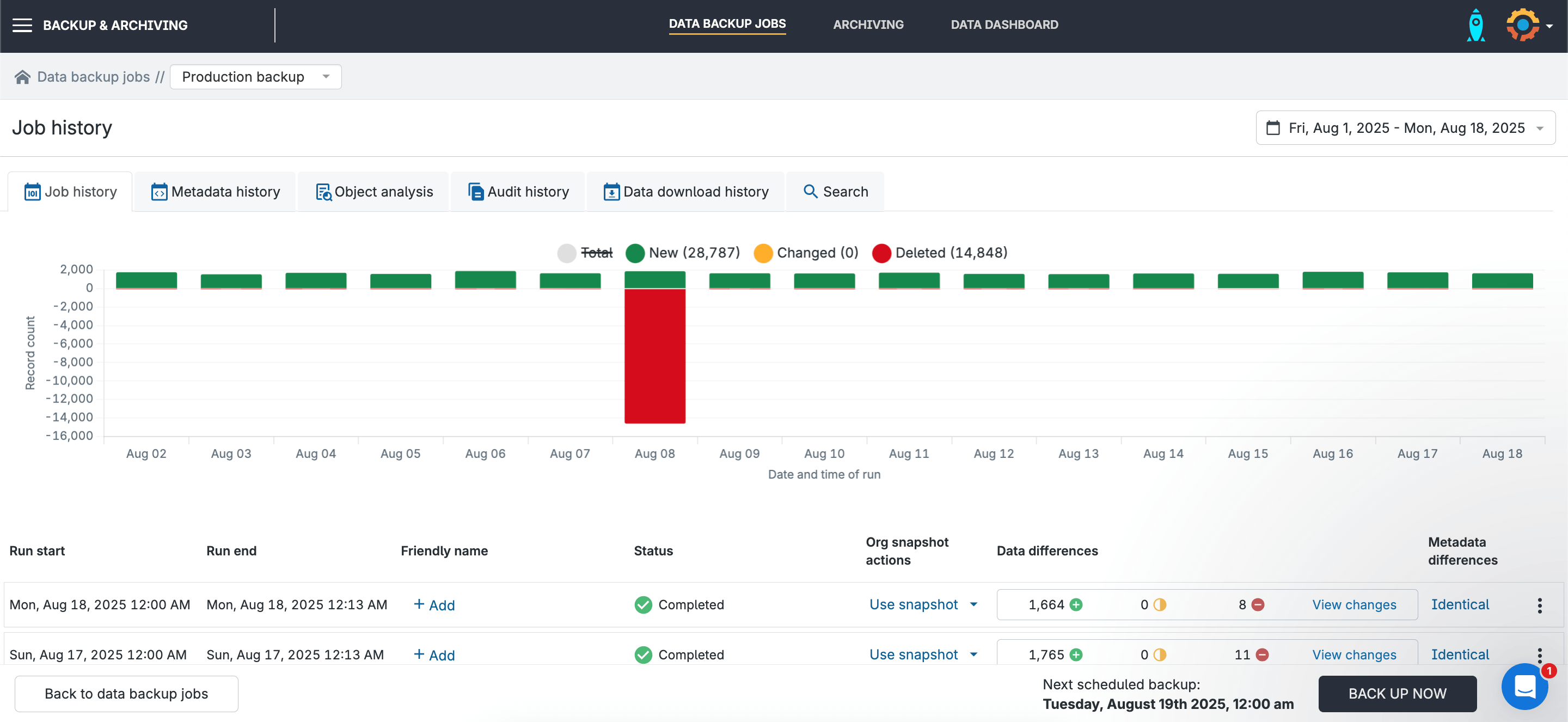

Effective planning for the operate stage means identifying the right metrics to track and setting clear alert thresholds. This ensures that failures are detected early and acted on immediately:

- Backup success rate: Are your jobs completing as expected?

- Mean time to recover (MTTR): How long does recovery actually take?

- Recovery Time Objective (RTO): What’s the maximum acceptable downtime?

- Recovery Point Objective (RPO): How much data loss is tolerable, and how recent is your last usable backup?

- Anomaly detection lead time: Are you catching data loss or corruption before users do?

- Backup job health monitoring: Are failures being flagged immediately?

These metrics shift your team from reactive to proactive, giving you early warning signs and the insight to improve over time.
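To show how some of these metrics relate, here’s a minimal Python sketch that computes backup success rate and MTTR and flags incidents that breached RTO or RPO targets. The `Incident` fields are hypothetical: downtime is measured from when the incident started to when service was recovered, and the data loss window as the gap between the last usable backup and the incident start.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime           # when the disruption began
    recovered: datetime         # when normal service was restored
    last_good_backup: datetime  # most recent usable backup before `started`


def backup_success_rate(job_results: list[bool]) -> float:
    """Share of backup runs that succeeded; 0.0 if there were no runs."""
    return sum(job_results) / len(job_results) if job_results else 0.0


def mean_time_to_recover(incidents: list[Incident]) -> timedelta:
    """Average time from disruption to recovery."""
    if not incidents:
        raise ValueError("MTTR is undefined without incidents")
    total = sum((i.recovered - i.started for i in incidents), timedelta())
    return total / len(incidents)


def objective_breaches(
    incidents: list[Incident], rto: timedelta, rpo: timedelta
) -> list[str]:
    """Describe each incident whose downtime or data loss window exceeded its target."""
    breaches = []
    for n, incident in enumerate(incidents):
        if incident.recovered - incident.started > rto:
            breaches.append(f"incident {n}: RTO exceeded")
        if incident.started - incident.last_good_backup > rpo:
            breaches.append(f"incident {n}: RPO exceeded")
    return breaches
```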

5 questions to help embed archiving and backup in your Salesforce DevOps lifecycle

Operational excellence isn’t a one-time setup. By regularly asking the right questions, you can surface blind spots, sharpen your response plans, and keep improving how your team operates.

Here are five questions to help you review your operate stage:

- When was our last successful restore, and what did we learn? Treat every restore as a chance to validate process and uncover gaps.

- Are backups triggered automatically in our CI/CD pipelines? Especially for teams that deploy larger changes periodically, an automatic metadata backup before each release gives you a reliable restore point if something goes wrong in production.

- What are our acceptable RTO and RPO, and have we tested them? Recovery time and data loss tolerances are only useful if you’ve validated them under real conditions.

- Which anomalies did our backup alerts catch this quarter? Alerts should lead to action. Reviewing what was flagged, and how the team responded, helps you tune your thresholds and prevent future incidents.

- What process improvements have we made since the last incident? Incidents are opportunities to gather insights and improve. What changed as a result? What’s easier, safer, or faster now than it was before?
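For the CI/CD question above, one simple safeguard is a pipeline step that refuses to deploy unless a recent backup exists. This Python sketch checks the age of the last backup against a threshold. How you obtain the timestamp (for example, from your backup tool or its job logs) is left as an assumption, and the 24-hour default is a placeholder that should reflect your own RPO.

```python
import sys
from datetime import datetime, timedelta, timezone


def backup_is_fresh(last_backup: datetime, max_age: timedelta, now: datetime) -> bool:
    """True if the last backup is no older than max_age."""
    return now - last_backup <= max_age


def main(last_backup_iso: str, max_age_hours: float = 24.0) -> int:
    """Return 0 to allow the deployment, 1 to block it."""
    last_backup = datetime.fromisoformat(last_backup_iso)
    if last_backup.tzinfo is None:
        # Assumption: timestamps without an offset are in UTC.
        last_backup = last_backup.replace(tzinfo=timezone.utc)
    now = datetime.now(timezone.utc)
    if backup_is_fresh(last_backup, timedelta(hours=max_age_hours), now):
        print("Recent backup found; deployment can proceed.")
        return 0
    print(f"No backup in the last {max_age_hours} hours; blocking deployment.", file=sys.stderr)
    return 1
```

In a pipeline, a step could call `main()` with the last backup’s ISO 8601 timestamp and pass the result to `sys.exit()`, so a stale backup fails the build before anything reaches production.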

Stay operational with Gearset

Because it’s built to support the complete DevOps lifecycle, Gearset gives Salesforce teams more flexibility, control, and reliability in the operate stage than native tools or point solutions. Here are some of the ways Gearset supports teams to stay in control in production.

Being off-platform adds resilience

While Salesforce offers native solutions like Salesforce Backup and Salesforce Backup and Recover, many teams choose Gearset for greater flexibility, faster data recovery, and a combined approach to backup and archiving. Storing backups off-platform adds an extra layer of security — isolating your backed up data from any issues inside Salesforce itself.

Gearset also offers off-platform archiving, removing the access and automation limitations that come with native tools like Big Objects or Salesforce Archive. You control how long data is kept, where it’s stored, and who can retrieve it — with no trade-offs on compliance or usability.

Intuitive workflows join up the DevOps lifecycle

Having one system for both release and recovery makes all the difference. Gearset is a complete Salesforce DevOps platform, combining backups and archiving with release management staples like deployments, version control, CI/CD, code reviews, and monitoring.

During the operate stage, that integration matters — daily backups, org snapshots, and point-in-time restores all live in the same UI your team already uses, so there’s no tool switching or extra overhead. With everything in one place, you get a clear view of org health, audit trails, and change history.

Maximum metadata coverage leaves no gaps in protection

Unlike many solutions, Gearset backs up all your metadata and data, giving you full coverage across your Salesforce environment. Metadata backups capture your org’s logic and structure — things like Apex code, flows, and permission sets — while data backups cover records like accounts, opportunities, and custom object data. You need both to fully restore functionality and prevent disruption.

This is especially critical in complex orgs where dependencies, relationships, and automation can break without the right metadata in place. As Heath Parks, Salesforce Manager at Cincinnati Works, put it: “A lot of tools can back up your data, but what’s it going to look like when you restore it? That was when the lightbulb went on.”

Operate with confidence

Gearset’s predictable, user-based pricing for teams of all sizes means no surprise costs for restores or overages. With one platform covering data and metadata management across the complete DevOps lifecycle, you can also avoid the complexity and extra cost of managing multiple tools for your Salesforce DevOps process. And because it’s built to scale, Gearset adapts as your business grows, instead of locking your orgs into rigid storage tiers or retention models.

Want to see how Gearset’s Backup & Archiving solution works in the real world? Hear from Jolene Mair, Gearset DevOps Leader and Salesforce Application Engineer at HackerOne, on the DevOps Diaries podcast. Or if you’re ready to see how Gearset can transform your backup and archiving strategy, book a demo and take control of the operate stage with confidence.