Salesforce’s flexibility is one of its biggest strengths, but frequent releases, custom components, and dynamic page structures can make automated UI testing a challenge.

In this post, we’ll explore the key challenges of UI testing in Salesforce and share practical solutions to help you build stable, maintainable test automation. We’ll also see why traditional automation frameworks often fail and how platforms designed specifically for Salesforce, like Gearset, can turn testing from a bottleneck into a competitive advantage.

What is automated UI testing?

Automated UI testing involves creating scripts or tests that simulate how a real user interacts with an application’s user interface (UI). In the case of Salesforce, automated UI tests are not written inside the platform itself. They’re created and maintained outside of Salesforce using specialized testing frameworks or tools.

Here are a few examples of the types of tools you can use:

Gearset’s Automated Testing solution uses AI-powered screen recording and scriptless test creation to make stable UI testing accessible to any team member, without the maintenance overhead of traditional tools.

Code-based frameworks like Selenium, Playwright, or WebdriverIO allow you to write tests using programming languages such as Java, JavaScript, or TypeScript. These tests are usually stored in the same repositories as your Salesforce metadata or other project assets, versioned in systems like GitHub, Bitbucket, or Azure DevOps (ADO).

UTAM (UI Test Automation Model) is Salesforce’s open-source framework for UI test automation, built to handle the platform’s unique component structure. You define “page objects” in JSON files within your project repository and the UTAM compiler converts these into Java or JavaScript classes, which your tests call to interact with the UI.

Third-party tools like Provar, ACCELQ, Testsigma, Tricentis, and Keysight Eggplant offer a more user-friendly approach to UI testing. These platforms let you create tests in their own UIs or simplified scripting environments. Many of these tools also support version control, so your test definitions can live alongside your Salesforce metadata or deployment scripts. Because the vendor platforms handle much of the underlying setup and execution, you write less custom Apex or automation code and can focus on validating how your Salesforce processes behave for users.

Once written, automated tests are typically executed against a Salesforce org, which could be a sandbox, scratch org, or another test environment. In Gearset’s CI/CD pipelines, you can set these tests to automatically trigger after each deployment.


Common challenges when automating UI tests in Salesforce

Let’s unpack the most common obstacles teams face when automating UI tests in Salesforce and the impact they can have on the stability and reliability of your test suites.

Dynamic element IDs and locators

Salesforce doesn’t assign stable, predictable names to UI components like buttons or fields. Instead, it generates identifiers at render time that can change between page loads. For example, an identifier like 60:220;a may become 90:220;a. If your automated test finds an element by that ID, it will fail as soon as the identifier changes, which makes it difficult to write tests that consistently interact with the correct elements.

Salesforce doesn’t provide a built-in way to add stable test identifiers to its standard components, like the data-testid attributes commonly used in web development. Developers can include custom attributes in their own Lightning Web Components (LWCs), but not in Salesforce’s managed or out-of-the-box ones.

On top of that, Salesforce’s Lightning framework is built on a deeply nested page structure, where UI components like buttons and fields are often contained within multiple layers of other elements. This can make it difficult to craft precise selectors that target a specific element. If you write a test to click a button, your selector might unintentionally match multiple buttons on the page due to the way the page is structured. This means your tests might fail, interact with the wrong elements, or cause other unexpected behavior. Tools like UTAM, Provar, and ACCELQ tackle this challenge with metadata or model-based locators instead of brittle, direct selectors.
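Here's a minimal TypeScript sketch of the scoping idea. It uses a hypothetical in-memory element tree. The El type and the findAll, findUnique, buttonLabeled and dialogButton helpers are illustrative only and are not part of Selenium, Playwright or any Salesforce API. The lookup first narrows the search to a dialog by its accessible name. It then refuses to act if more than one element matches, which is similar in spirit to Playwright's strict locators.

```typescript
// Hypothetical, simplified element model for illustration; not a browser API.
interface El {
  tag: string;
  attrs: Record<string, string>;
  text?: string;
  children: El[];
}

// Depth-first walk that collects every element satisfying the predicate.
function findAll(root: El, match: (el: El) => boolean): El[] {
  const found: El[] = [];
  const stack: El[] = [root];
  while (stack.length > 0) {
    const el = stack.pop() as El;
    if (match(el)) found.push(el);
    stack.push(...el.children);
  }
  return found;
}

// Strict lookup: throw instead of silently acting on the first of several matches.
function findUnique(root: El, match: (el: El) => boolean, what: string): El {
  const matches = findAll(root, match);
  if (matches.length !== 1) {
    throw new Error(`Expected exactly one ${what}, found ${matches.length}`);
  }
  return matches[0];
}

const buttonLabeled = (label: string) => (el: El): boolean =>
  el.tag === "button" && el.text === label;

// Scope first (the dialog, by its accessible name), then search only inside it.
function dialogButton(page: El, dialogName: string, label: string): El {
  const dialog = findUnique(
    page,
    (el) => el.attrs["role"] === "dialog" && el.attrs["aria-label"] === dialogName,
    `dialog "${dialogName}"`,
  );
  return findUnique(dialog, buttonLabeled(label), `"${label}" button`);
}

// A record page with a header Save button plus a second one inside an edit modal.
const recordPage: El = {
  tag: "div",
  attrs: {},
  children: [
    { tag: "button", attrs: { id: "60:220;a" }, text: "Save", children: [] },
    {
      tag: "section",
      attrs: { role: "dialog", "aria-label": "Edit Account" },
      children: [{ tag: "button", attrs: { id: "90:220;a" }, text: "Save", children: [] }],
    },
  ],
};
```

Calling findUnique(recordPage, buttonLabeled("Save"), "Save button") throws because two Save buttons match. By contrast, dialogButton(recordPage, "Edit Account", "Save") returns the button inside the modal. Failing loudly on ambiguity is deliberate. A test that stops with a clear error is easier to fix than one that quietly clicks the wrong button.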

Shadow DOM and Lightning Web Components

Modern Salesforce pages are built using Lightning Web Components (LWCs), which rely on the Shadow Document Object Model (DOM) web standard. The Shadow DOM encapsulates components so their internal HTML, CSS, and logic are hidden and don’t interfere with anything outside the component. That encapsulation exists to keep pages stable and predictable: a component’s styles and markup can’t be accidentally overridden by the rest of the page, and the component can change internally without breaking whatever uses it.

For testers, this creates problems. An automated test can see that a component exists, but it can’t directly access the buttons or fields inside it because they sit behind the Shadow DOM boundary. Some components only load after interaction, such as clicking a tab or scrolling a section into view, so tests need to wait for them to appear before they can interact. Salesforce also uses iframes to render Visualforce pages and some third-party apps, which means tests have to switch into the correct frame before they can work with the content inside it.

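Automation tools handle these challenges in different ways. Selenium has traditionally needed custom JavaScript to reach elements inside the Shadow DOM. Selenium 4 adds shadow root support (getShadowRoot() in the Java bindings), but tests still have to step through each shadow boundary explicitly. Playwright's CSS and text locators pierce open shadow roots by default, which reduces the maintenance burden and complexity of test scripts.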
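The next TypeScript sketch contrasts an ordinary query with a shadow-piercing one, using a simplified model. ShadowNode, queryLight and queryPiercing are hypothetical names, the tree stands in for a real page, and c-account-form is an invented custom LWC. queryLight stops at each shadow boundary, much like a light-DOM query. queryPiercing also descends into every shadow root, which is roughly what shadow-piercing locators do for open shadow roots.

```typescript
// Simplified, hypothetical node model: content under shadowRoot is hidden
// from ordinary light-DOM queries.
interface ShadowNode {
  tag: string;
  text?: string;
  children: ShadowNode[]; // light-DOM children
  shadowRoot?: ShadowNode[]; // content behind an (open) shadow boundary
}

type NodeMatch = (n: ShadowNode) => boolean;

// Walks light-DOM children only, so it never sees inside a component.
function queryLight(node: ShadowNode, match: NodeMatch): ShadowNode | undefined {
  if (match(node)) return node;
  for (const child of node.children) {
    const hit = queryLight(child, match);
    if (hit) return hit;
  }
  return undefined;
}

// Also descends into each shadow root it encounters.
function queryPiercing(node: ShadowNode, match: NodeMatch): ShadowNode | undefined {
  if (match(node)) return node;
  for (const child of [...(node.shadowRoot ?? []), ...node.children]) {
    const hit = queryPiercing(child, match);
    if (hit) return hit;
  }
  return undefined;
}

// A custom component whose Save button sits two shadow boundaries deep.
const accountPage: ShadowNode = {
  tag: "body",
  children: [
    {
      tag: "c-account-form",
      children: [],
      shadowRoot: [
        {
          tag: "lightning-button",
          children: [],
          shadowRoot: [{ tag: "button", text: "Save", children: [] }],
        },
      ],
    },
  ],
};

const isSaveButton: NodeMatch = (n) => n.tag === "button" && n.text === "Save";
```

Here queryLight(accountPage, isSaveButton) returns undefined, even though a Save button is on screen. queryPiercing finds it two shadow boundaries down.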

Frequent platform updates

Salesforce rolls out three major updates every year (Spring, Summer, and Winter) automatically to all orgs, along with periodic patches and critical updates. These updates can change how pages and components behave, which means even a stable test suite can start failing after a new release.

To help with this, Salesforce offers a sandbox preview, where teams can refresh or create sandboxes so they receive the upcoming release before the rest of their environments. This early access gives teams a chance to test and validate their processes, including automated UI tests, before the changes go live. Unfortunately, preview sandboxes can contain early-stage bugs and temporary inconsistencies in metadata or Lightning components. These can cause false test failures or deployment issues between preview and non-preview environments. UI tests, in particular, can be fragile if the release introduces layout or DOM adjustments that Salesforce later patches during the preview phase.

Because of this release cadence and the challenges of sandbox preview, teams need to continuously monitor Salesforce updates and adjust their test automation to stay reliable. The rapid pace of change adds ongoing maintenance work and makes long-term test stability a constant challenge.

Complex page loading

Salesforce pages don’t load all at once. When a Lightning page opens, the browser first loads the layout, then initializes the Lightning components, and finally runs background calls to pull in data. Because of this, your automated tests can’t assume the page is ready the moment it appears. They need to wait until everything has finished loading and is actually usable.

A common problem is that a test finds a button before it’s fully interactive, because its associated JavaScript is still running in the background. The button exists in the DOM, but it isn’t ready to be clicked yet, so the test fails.

To deal with this, automated tests need to be able to wait for all related processes, like JavaScript execution or data loading, to be completed. Without that kind of synchronization, even well-written tests can break for no obvious reason.
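The TypeScript sketch below shows that kind of synchronization, assuming your test can read a snapshot of the element's state. The ButtonState fields and the waitUntil helper are hypothetical. Real frameworks ship their own waiting APIs (Playwright, for example, auto-waits for elements to be actionable before acting), so treat this as an illustration of the polling pattern rather than a drop-in utility.

```typescript
// Hypothetical snapshot of what a test can observe about a button.
interface ButtonState {
  inDom: boolean;
  disabled: boolean;
  spinnerVisible: boolean;
}

// "Present" isn't enough: the button must be enabled and no spinner may be showing.
const isActionable = (s: ButtonState): boolean =>
  s.inDom && !s.disabled && !s.spinnerVisible;

// Poll a condition until it holds, or fail with a descriptive timeout.
async function waitUntil(
  condition: () => boolean | Promise<boolean>,
  { timeoutMs = 10_000, intervalMs = 100, description = "condition" } = {},
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    if (await condition()) return;
    if (Date.now() >= deadline) {
      throw new Error(`Timed out after ${timeoutMs} ms waiting for ${description}`);
    }
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

A test would call something like await waitUntil(() => isActionable(readSaveButton()), { description: "Save button" }), where readSaveButton is a hypothetical function that inspects the page. The key design choice is that being in the DOM is only one of the conditions. The helper keeps polling until the button is also enabled and no spinner is showing, and it fails with a descriptive timeout instead of hanging.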

Multi-org variations

Each org, from development to production, can have its own configuration, custom fields, page layouts, and permission sets, which makes it tough to build UI tests that run reliably across environments. Even small differences, like a hidden field or a stricter validation rule, can cause a test to fail.

Every custom object, field, workflow, or Lightning component adds more moving parts. A process like lead conversion might follow dozens of possible paths, depending on user roles or business rules, so a single test can’t easily cover every scenario.

Declarative tools like Flow Builder also add “hidden logic” behind the scenes. These automations can update records or call external systems without anything changing in the UI, meaning your tests may pass even while a background error goes unnoticed.

Test data management

Automated UI tests only work if they run against data that looks and behaves like the real thing. If your tests use records called “Test User” or “ABC Company,” you may not trigger the same validation rules, Flows, or automation your users hit every day. That means your tests might pass even though the real experience would break.

Privacy laws like GDPR and CCPA mean you often can’t just copy production data into a test environment. Instead, you need anonymized data that still acts like your live records and is realistic enough to trigger the same logic, without exposing sensitive details.

Salesforce’s Data Mask helps anonymize data in sandboxes, but it’s limited when you need more control over how records relate to each other.

Gearset’s data masking, in-place masking, and sandbox seeding tools go further by preserving relationships between objects — like Accounts, Contacts, and Opportunities — so your tests can run against realistic, connected datasets. This lets you safely test real user flows and automation without risking sensitive information.
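Here's a TypeScript sketch of relationship-preserving masking in general. It is not how Gearset implements masking. It's a simplified illustration with hypothetical Account and Contact shapes. Personal fields are replaced with deterministic pseudonyms. Record IDs and lookup fields such as AccountId are left untouched, so every masked Contact still points at its masked Account.

```typescript
// Hypothetical, simplified record shapes (real Salesforce objects have many more fields).
interface Account {
  Id: string;
  Name: string;
}
interface Contact {
  Id: string;
  AccountId: string;
  FirstName: string;
  LastName: string;
  Email: string;
}

// Deterministic pseudonyms: the same real value always maps to the same fake value.
function makePseudonymizer(prefix: string): (value: string) => string {
  const seen = new Map<string, string>();
  return (value) => {
    let fake = seen.get(value);
    if (fake === undefined) {
      fake = `${prefix}-${seen.size + 1}`;
      seen.set(value, fake);
    }
    return fake;
  };
}

function maskDataset(accounts: Account[], contacts: Contact[]) {
  const company = makePseudonymizer("Company");
  const firstName = makePseudonymizer("First");
  const lastName = makePseudonymizer("Last");

  const maskedAccounts = accounts.map((a) => ({ ...a, Name: company(a.Name) }));
  const maskedContacts = contacts.map((c) => {
    const first = firstName(c.FirstName);
    const last = lastName(c.LastName);
    return {
      ...c, // Id and AccountId are kept, so the Account-to-Contact link survives
      FirstName: first,
      LastName: last,
      // Still a well-formed address, so email-format validation keeps firing.
      Email: `${first}.${last}@example.com`.toLowerCase(),
    };
  });
  return { accounts: maskedAccounts, contacts: maskedContacts };
}
```

Because the same real value always maps to the same pseudonym, two Contacts that shared a name or email before masking still share one afterwards. Rules that look for duplicates therefore behave the same way on the masked data.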

User profiles and permissions

Salesforce’s UI changes depending on who’s logged in. Page layouts, buttons, and fields all vary by profile, record type, or permission set. A test that passes when run as a System Administrator might fail for a standard user, simply because the element it’s looking for isn’t visible or accessible.
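One way to catch these differences is to run the same check once per persona, asserting both what each user should see and what they shouldn't. The TypeScript sketch below is hypothetical. The profiles, the Discount__c custom field, and the idea that a test passes in the set of field labels it observed after logging in as that user are all assumptions made for illustration.

```typescript
// Hypothetical persona definition: which fields a profile should and shouldn't see.
interface Persona {
  profile: string;
  expectVisible: string[];
  expectHidden: string[];
}

// Illustrative personas for an Opportunity page; Discount__c is an invented custom field.
const personas: Persona[] = [
  { profile: "System Administrator", expectVisible: ["Amount", "Discount__c"], expectHidden: [] },
  { profile: "Standard User", expectVisible: ["Amount"], expectHidden: ["Discount__c"] },
];

// Compare what a persona actually saw against expectations; returns readable problems.
function checkPersona(persona: Persona, visible: Set<string>): string[] {
  const problems: string[] = [];
  for (const field of persona.expectVisible) {
    if (!visible.has(field)) problems.push(`${persona.profile}: expected to see ${field}`);
  }
  for (const field of persona.expectHidden) {
    if (visible.has(field)) problems.push(`${persona.profile}: should not see ${field}`);
  }
  return problems;
}
```

Checking expectHidden matters as much as checking expectVisible. A test run only as an administrator would never notice that a standard user can see a field they shouldn't.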

Cross-browser consistency

Salesforce supports all major browsers (Chrome, Firefox, Safari, and Edge), but each one renders pages slightly differently. These small differences can affect automated interactions, especially in areas like modals, buttons, or responsive layouts.

Best practices to ensure stable UI testing automation

To reduce flakiness and keep your UI tests reliable across Salesforce updates, it helps to follow a few established best practices. Here are some practical ways to build a more stable UI test suite.

Use the page object model

The Page Object Model (POM) is a widely used design pattern in software testing. Using this model for Salesforce UI testing, you create a corresponding class in your test code for each page or reusable component. This class acts as a centralized library, storing all the necessary information about how to find and interact with elements on that page.

The POM makes your test suite easier to maintain, read, and reuse. When Salesforce updates the DOM or changes a button label, you only need to update one Page Object instead of fixing every individual test. Well-written tests using this pattern are easier to follow, reading more like real user workflows than technical scripts. And because you can build Page Objects for standard Salesforce components, like record forms or related lists, you can reuse them across multiple tests, keeping your framework cleaner and more consistent.
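A minimal Page Object in TypeScript might look like the sketch below. The Driver interface and the kind:value locator strings (such as "button:Save") are hypothetical stand-ins for the Selenium, Playwright or UTAM-generated objects you actually use. The point is that every locator for the Account edit form lives in one place.

```typescript
// Hypothetical driver abstraction; in practice this would wrap a real framework.
interface Driver {
  click(locator: string): void;
  fill(locator: string, value: string): void;
  textOf(locator: string): string;
}

// One class per page: every locator for the Account edit form lives here.
class AccountEditPage {
  // The "kind:value" locator format is illustrative, not a real framework syntax.
  static readonly locators = {
    name: "label:Account Name",
    phone: "label:Phone",
    save: "button:Save",
    toast: "role:status",
  };

  private readonly driver: Driver;

  constructor(driver: Driver) {
    this.driver = driver;
  }

  setName(name: string): this {
    this.driver.fill(AccountEditPage.locators.name, name);
    return this;
  }

  setPhone(phone: string): this {
    this.driver.fill(AccountEditPage.locators.phone, phone);
    return this;
  }

  // Clicks Save and returns the confirmation toast text for the test to assert on.
  save(): string {
    this.driver.click(AccountEditPage.locators.save);
    return this.driver.textOf(AccountEditPage.locators.toast);
  }
}
```

A test then reads like a user workflow: new AccountEditPage(driver).setName("Acme").setPhone("555-0100").save(). If Salesforce changes how the Save button is rendered, only the locators object needs updating.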

The pattern works with frameworks like Selenium, Playwright, and Provar, and Salesforce’s own UTAM framework is built around the same idea. If you’re managing multiple environments, make sure your Page Objects can handle org-specific variations like custom fields or layouts. That’ll help your tests stay stable wherever they run.
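One way to handle org differences is to layer per-org overrides on top of a base locator map. In this TypeScript sketch, the org aliases (uat, dev, prod) and field labels are hypothetical. So is the convention of using null to mean "this field isn't deployed here". None of these are features of any particular framework.

```typescript
// Hypothetical locator map: logical field name -> visible label the test searches for.
type LocatorMap = Record<string, string>;

const baseLocators: LocatorMap = {
  accountName: "Account Name",
  region: "Region",
};

// Per-org overrides (illustrative). A string relabels a field; null means the
// field isn't deployed to that org, so tests should skip it rather than fail.
const orgOverrides: Record<string, Record<string, string | null>> = {
  uat: { region: "Sales Region" },
  dev: { region: null },
};

// Merge the base locators with any overrides for the given org alias.
function locatorsFor(org: string): Map<string, string> {
  const merged = new Map<string, string>(Object.entries(baseLocators));
  const overrides: Record<string, string | null> = orgOverrides[org] ?? {};
  for (const [field, label] of Object.entries(overrides)) {
    if (label === null) {
      merged.delete(field);
    } else {
      merged.set(field, label);
    }
  }
  return merged;
}
```

A Page Object could call locatorsFor with the current org alias once at startup. A test can then skip steps for fields that aren't present in that org instead of failing.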

Use smart locator strategies

When writing automated UI tests for Salesforce, it’s crucial to locate elements the way a user would interact with them, rather than relying on the auto-generated identifiers Salesforce creates behind the scenes. For example, instead of targeting an internal identifier like j_id0:j_id1:j_id2:saveBtn, write your tests to find the “Save” button by its label, name, or accessibility attributes.

To further improve stability:

- Work with development teams: Add stable data-testid attributes to custom Lightning Web Components. You can’t do this for Salesforce’s built-in components, but it’s a simple, one-time step that makes your custom elements much easier to test.

- Consider metadata-aware tools: Tools like Provar or ACCELQ identify elements by their Salesforce API names (for example, Account.Primary_Contact__c) instead of the DOM structure. Those names stay stable even when Salesforce updates the interface.

- Build fallback strategies into your test framework: Have your tests try a primary locator first, then a backup if it’s not found. That way, your tests won’t fail over a minor UI change or label tweak.
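The fallback chain in the last bullet could be sketched in TypeScript as follows. The Candidate shape and the byTestId, byAriaLabel and byText helpers are hypothetical. To keep the example short, the page is a flat list rather than a real DOM. None of the strategies uses Salesforce's generated IDs.

```typescript
// Hypothetical element representation, flattened for brevity.
interface Candidate {
  attrs: Record<string, string>;
  text: string;
}

interface Strategy {
  name: string;
  find: (page: Candidate[]) => Candidate | undefined;
}

// Most stable first: a test ID you added to your own LWC.
const byTestId = (id: string): Strategy => ({
  name: `data-testid=${id}`,
  find: (page) => page.find((el) => el.attrs["data-testid"] === id),
});

// Next: the accessible name a screen reader would announce.
const byAriaLabel = (label: string): Strategy => ({
  name: `aria-label=${label}`,
  find: (page) => page.find((el) => el.attrs["aria-label"] === label),
});

// Last resort: the visible text a user would read.
const byText = (text: string): Strategy => ({
  name: `text=${text}`,
  find: (page) => page.find((el) => el.text.trim() === text),
});

// Try each strategy in order and report which one matched.
function locate(page: Candidate[], strategies: Strategy[]): { el: Candidate; via: string } {
  for (const s of strategies) {
    const el = s.find(page);
    if (el) return { el, via: s.name };
  }
  throw new Error(`No strategy matched: ${strategies.map((s) => s.name).join(", ")}`);
}
```

Returning which strategy matched (the via field) is deliberate. If your logs show a test regularly falling back to text matching, the primary locator has broken and should be fixed before the fallback breaks too.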

Design for Salesforce’s architecture

Designing your testing framework around Salesforce’s architecture starts with how your tests handle timing. Rather than relying on fixed pauses, use intelligent wait strategies that watch for specific conditions, such as whether the Save button is clickable. This keeps tests efficient and reliable, cutting unnecessary delays and false failures.

Build your tests in small, reusable pieces so failures are easy to trace — you’ll know which step broke, not just that something failed. And once you’ve built a stable suite, connect it to your CI/CD pipeline so tests run automatically after every deployment.
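A small wrapper is enough to make failures name the step that broke. In this TypeScript sketch, step, LeadView, openLead and convertLead are hypothetical, and the step bodies are placeholders for real UI actions. They're synchronous to keep the example short, but an async version would simply await the action.

```typescript
// Run a named step; if it throws, rethrow with the step name so the report
// says which step broke, not just that the test failed.
function step<T>(name: string, action: () => T): T {
  try {
    return action();
  } catch (err) {
    const reason = err instanceof Error ? err.message : String(err);
    throw new Error(`Step "${name}" failed: ${reason}`);
  }
}

// Hypothetical lead shape and reusable steps; bodies stand in for real UI actions.
interface LeadView {
  id: string;
  status: string;
}

const openLead = (id: string): LeadView =>
  step(`open lead ${id}`, () => ({ id, status: "Open" }));

const convertLead = (lead: LeadView): string =>
  step(`convert lead ${lead.id}`, () => {
    if (lead.status !== "Qualified") {
      throw new Error(`status is ${lead.status}`);
    }
    return "001-hypothetical-account-id";
  });
```

Running convertLead(openLead("00Q-demo")) fails with the message Step "convert lead 00Q-demo" failed: status is Open. That points straight at the conversion step rather than at a generic assertion error.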

How Gearset helps you automate Salesforce UI testing

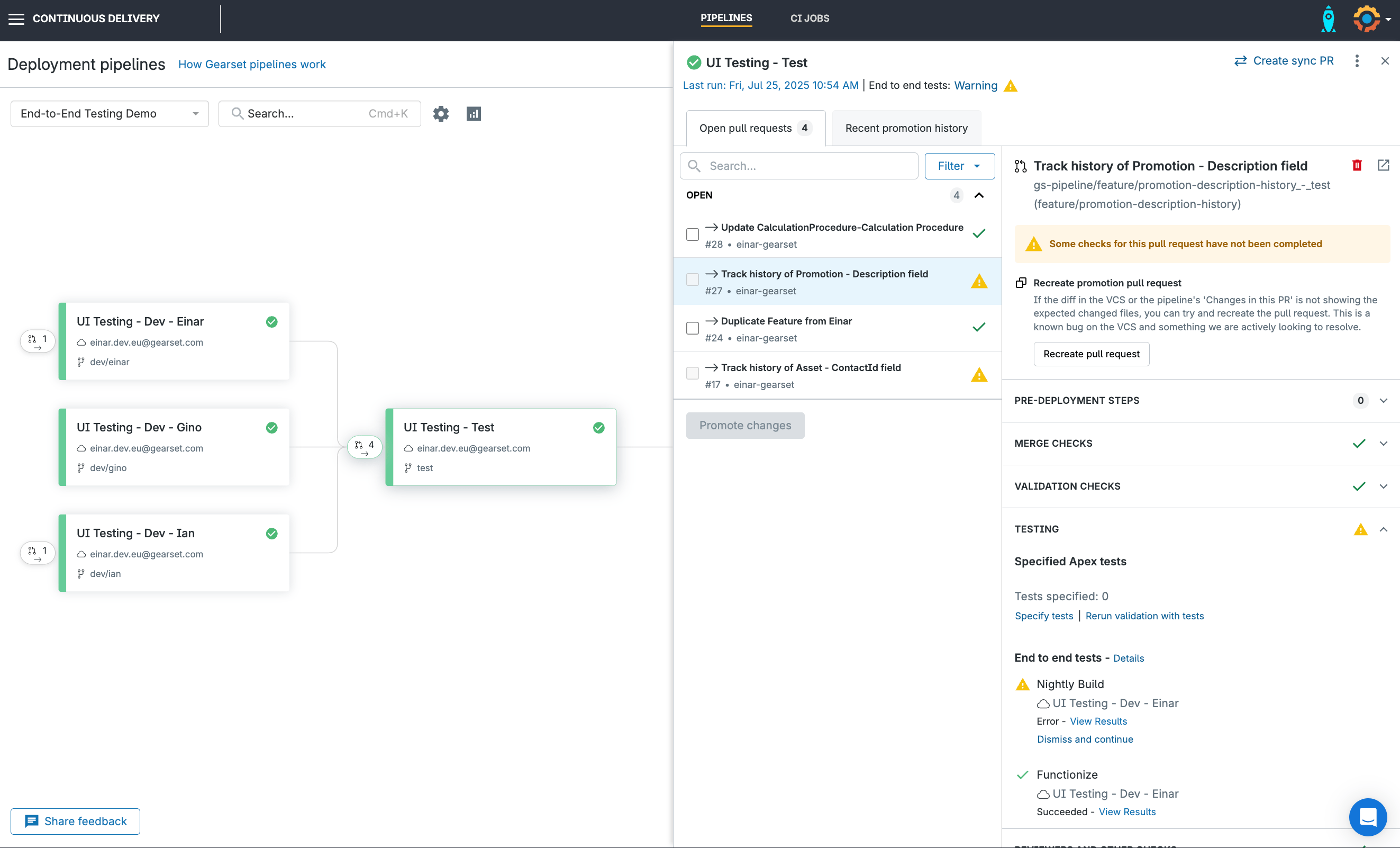

Built for Salesforce, Gearset simplifies the testing process by embedding UI testing in the full DevOps lifecycle.

Gearset’s Automated Testing: built-in, AI-powered UI testing

Gearset Automated Testing works as a standalone solution for UI test automation, making test creation easy for everyone. Better still, for customers using the full Gearset platform, Automated Testing is built into the DevOps process. Automated Testing empowers Salesforce teams to create their own tests:

- Scriptless/AI-powered screen recording — no coding required

- Understands Salesforce-specific complexity (Lightning, Shadow DOM, dynamic elements)

- More resilient to Salesforce releases than brittle locator-based tools

- Role-based testing that mirrors real user profiles and permission sets

- Native integration into Gearset Pipelines, working alongside Gearset Code Reviews

Seamless integration with third-party testing platforms

Gearset also offers smooth integrations with leading testing platforms like Provar, ACCELQ, Testsigma, Keysight Eggplant, and Tricentis. Integrated tests are automatically executed after a successful deployment, making sure that every change is validated without the need for manual intervention or extra configuration.

Once tests are executed, results are displayed in Gearset’s Pipelines and CI job dashboard. This provides full visibility across the testing pipeline without needing to switch between different tools or interfaces.

Unified testing across the DevOps lifecycle

Gearset simplifies testing by bringing together automated unit and UI testing in one workflow, reducing the complexity of managing separate tools at different stages.

Unit tests run directly in Gearset, with a full history of results and coverage reports to help you spot recurring failures and track quality over time. You can schedule unit tests to run outside working hours and enforce coverage thresholds so only well-tested code moves forward.

UI tests fit right into the same release process. By combining scheduling, coverage gates, and integrated test orchestration, Gearset gives you a single, reliable testing workflow that keeps your DevOps lifecycle running smoothly.

Easily create UI tests and release with confidence

Explore Gearset’s Automated Testing solution to see how easily your team can overcome their Salesforce UI testing challenges. Start your free 30-day trial of Gearset today and experience full testing capabilities with no commitment. Or book a demo to see how Gearset can address your specific testing needs with a personalized walkthrough.