Gearset is always looking into different ways we can improve our users’ deployment success rate. We noticed recently that some of our users were hitting particular Salesforce errors when deploying large numbers of records, so we tuned up Gearset to make your data deployments more likely to succeed first time. In this post, we’ll look at what causes those errors and explain how we’ve gone about solving the problem for you.

What makes some large data deployments fail?

Generally speaking, larger data deployments have more knock-on effects, and these effects can occasionally cause the deployments to fail. 😞

1. Deployments with multiple batches fail because of locking conflicts

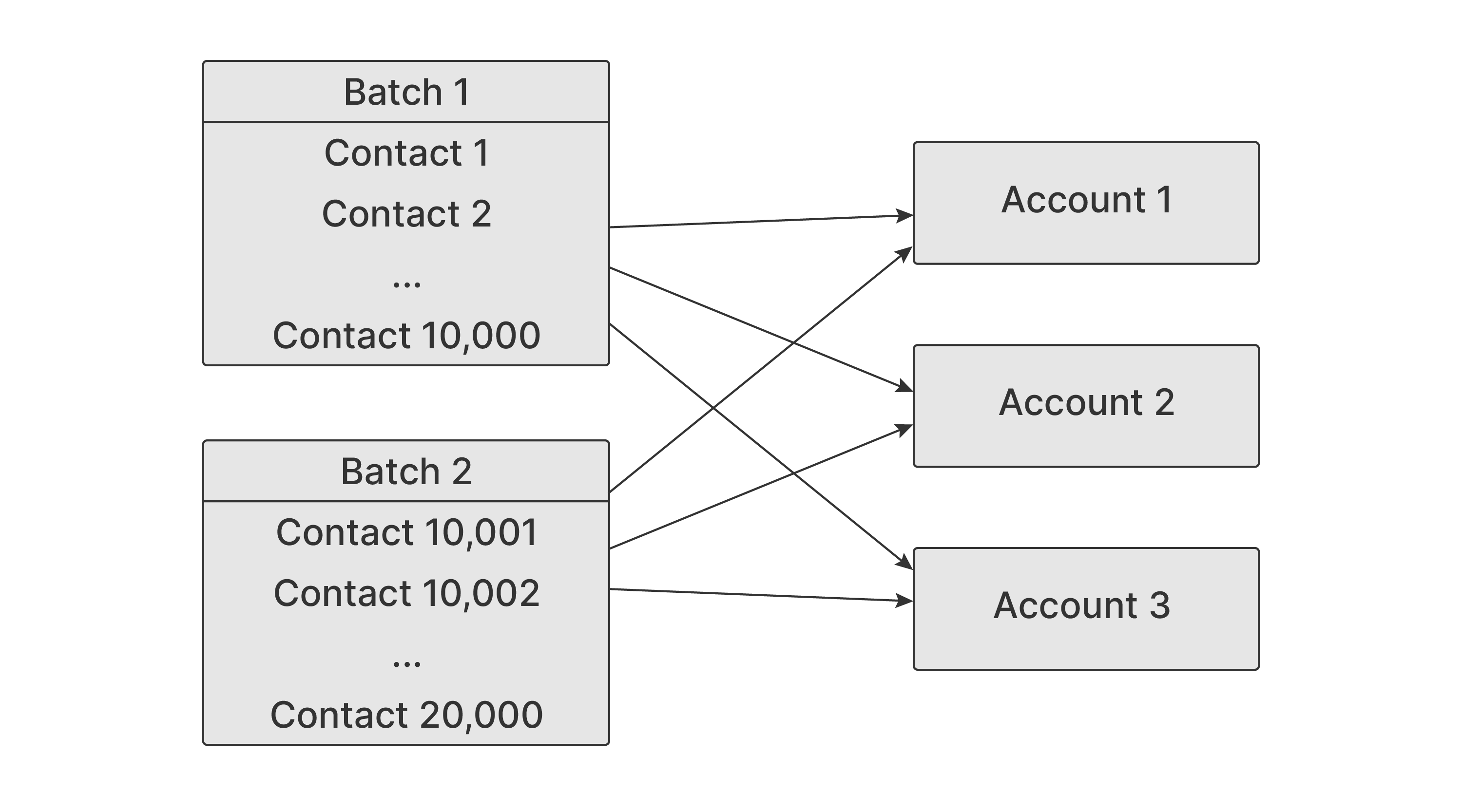

Gearset mostly uses Salesforce’s Bulk API for data deployments, which limits batch sizes to 10,000 records. This means that when you deploy more than 10,000 records to a single object, Gearset has to break these up into batches of no more than 10,000 records. For example, deploying 25,000 records to the Contact object would need three batches.
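The batch arithmetic above is a ceiling division: the number of batches is the record count divided by the maximum batch size, rounded up. Here is a minimal Python sketch of a naive, order-preserving split. The helper names are hypothetical and this is not Gearset's actual batching code.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# Bulk API limit described above: at most 10,000 records per batch.
MAX_BATCH_SIZE = 10_000


def split_into_batches(records: Sequence[T], batch_size: int = MAX_BATCH_SIZE) -> List[List[T]]:
    """Naively split records, in order, into batches of at most batch_size records."""
    if not 1 <= batch_size <= MAX_BATCH_SIZE:
        raise ValueError(f"batch_size must be between 1 and {MAX_BATCH_SIZE}")
    return [list(records[i:i + batch_size]) for i in range(0, len(records), batch_size)]


def batch_count(record_count: int, batch_size: int = MAX_BATCH_SIZE) -> int:
    """Number of batches needed: record_count / batch_size, rounded up."""
    return math.ceil(record_count / batch_size)


contacts = [f"Contact {n}" for n in range(25_000)]
batches = split_into_batches(contacts)
print(len(batches), [len(b) for b in batches])  # 3 [10000, 10000, 5000]
print(batch_count(25_000))  # 3
```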

These larger data deployments can run into trouble because of record locking. While a record is being changed, Salesforce locks that record and the records it references, such as the Account a Contact belongs to. This locks out any other attempt to change those records until the first change has finished.

Problems arise when the first batch of a deployment locks any records that subsequent batches in the deployment also need to lock. Let’s imagine a deployment of two batches, with each batch containing a Contact belonging to the same Account. If all goes well, Salesforce will perform these steps:

Batch 1:

- Lock the Account

- Update Contact 1

- Unlock the Account

Batch 2:

- Lock the Account

- Update Contact 2

- Unlock the Account

But if the Account is still locked by the first batch when the second batch tries to lock it, the second batch can't proceed. Salesforce will retry up to ten times and, if the lock still hasn't been released, the batch fails with the error TooManyLockFailure.
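To make the retry behaviour concrete, here is a toy Python model of the two-batch scenario. It is a conceptual sketch, not how Salesforce implements locking internally. The `AccountLocks` class, the `simulate` helper and the Account ID are hypothetical, and the retry semantics (one initial attempt plus up to ten retries) are an assumption based on the description above.

```python
from typing import Callable, Set

# Assumed semantics: one initial attempt plus up to ten retries.
MAX_LOCK_RETRIES = 10


class TooManyLockFailure(Exception):
    """Stand-in for the Salesforce error of the same name (modelled, not real)."""


class AccountLocks:
    """Toy lock table: an Account ID is either locked or free."""

    def __init__(self) -> None:
        self.locked: Set[str] = set()

    def try_lock(self, account_id: str) -> bool:
        if account_id in self.locked:
            return False
        self.locked.add(account_id)
        return True

    def unlock(self, account_id: str) -> None:
        self.locked.discard(account_id)


def acquire_with_retries(try_lock: Callable[[], bool], max_retries: int = MAX_LOCK_RETRIES) -> int:
    """Try once, then retry up to max_retries times; return the number of attempts used."""
    for attempt in range(1, max_retries + 2):
        if try_lock():
            return attempt
    raise TooManyLockFailure(f"lock still held after {max_retries} retries")


def simulate(hold_for: int) -> int:
    """Batch 1 holds the shared Account for `hold_for` of batch 2's attempts."""
    locks = AccountLocks()
    locks.try_lock("001-A")  # batch 1 locks the Account while updating Contact 1
    failed_attempts = 0

    def batch2_try() -> bool:
        nonlocal failed_attempts
        if failed_attempts == hold_for:
            locks.unlock("001-A")  # batch 1 finishes and releases the Account
        if locks.try_lock("001-A"):
            return True  # batch 2 can now update Contact 2
        failed_attempts += 1
        return False

    return acquire_with_retries(batch2_try)


print(simulate(3))  # 4: succeeds on the fourth attempt
try:
    simulate(20)
except TooManyLockFailure as err:
    print(err)  # lock still held after 10 retries
```

If the first batch releases the Account quickly, the second batch simply waits a few attempts. If it holds the lock for too long, the retries run out and the batch fails.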

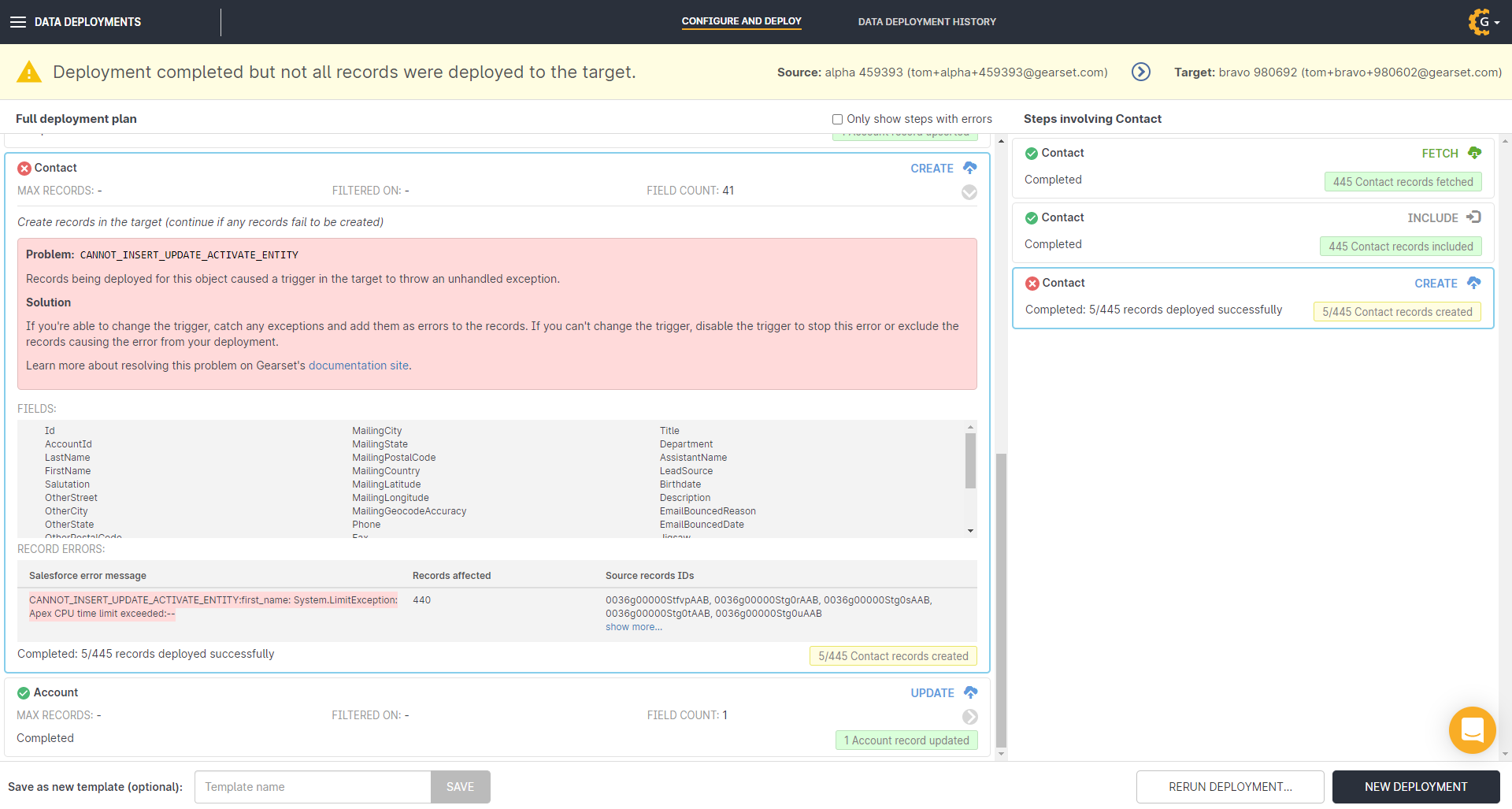

2. Deployments fail with CPU timeouts

Synchronous transactions in Salesforce have a ten-second CPU time limit, so even a single-batch deployment can be large enough to fail with a CPU timeout. If the object you're deploying records to has triggers, the deployment will fire all of that automation. Depending on what you have set up, a lot of automated processes could be trying to run at the same time, and Process Builder processes or flows might trigger further changes of their own. The more work this automation does, the more likely you are to hit a CPU timeout.

For example, you might have a straightforward data deployment of Contacts, but the Contact object has triggers that fire and run multiple processes when you deploy. If their combined processing exceeds the CPU limit, the Contact records can't be deployed, and Salesforce reports the error message: Apex CPU time limit exceeded.

Solutions to make data deployments more successful

We’ve introduced two solutions to help avoid these problems and make your data deployments more successful! 🥳

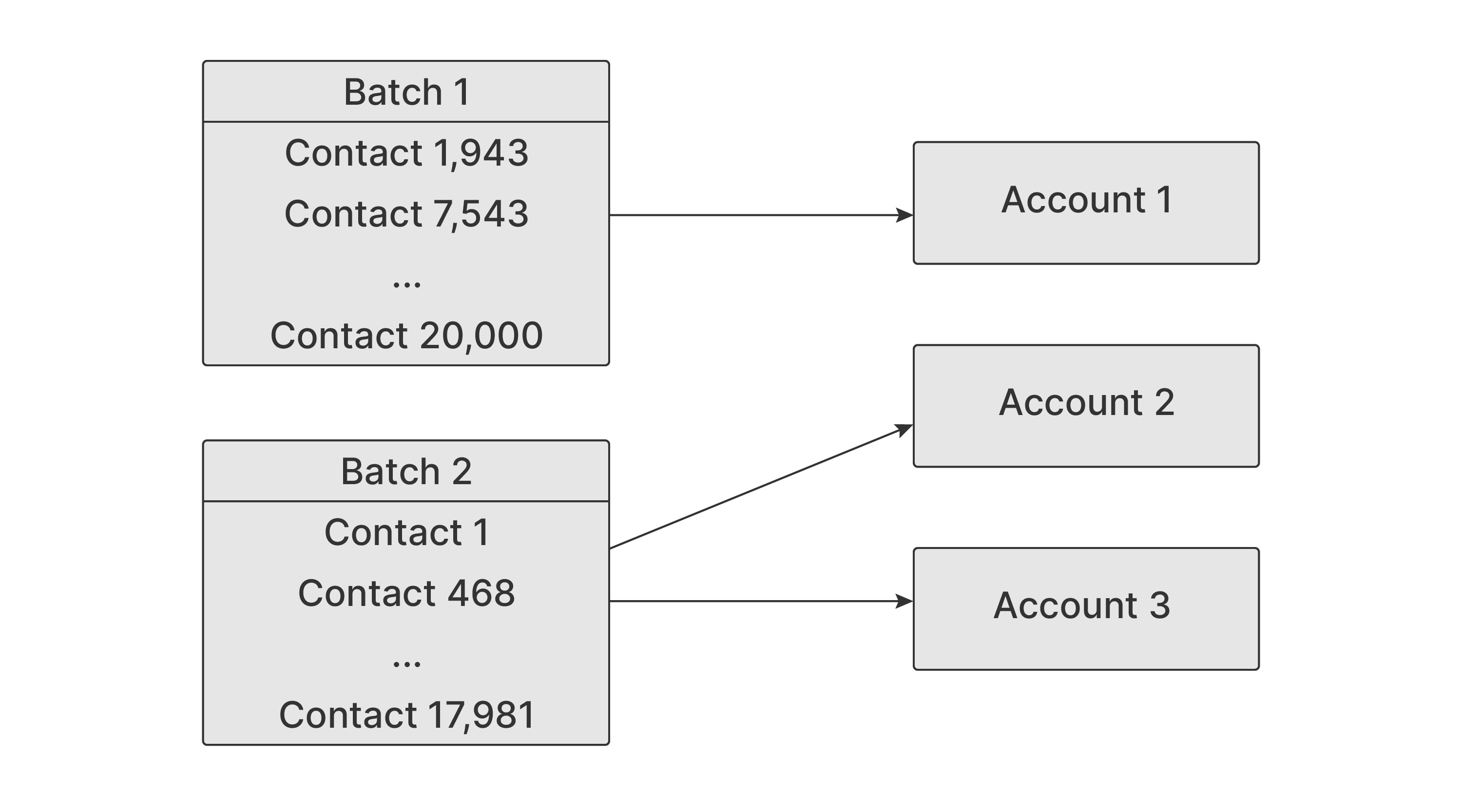

1. Batching records by the parent records they lock

Gearset now batches records specifically to avoid failures caused by locking. Previously, for example, every batch might contain Contacts referencing the same three Accounts. In that scenario, the second batch had to wait for the first batch to release its locks on those Accounts, and would sometimes fail if the first batch held the locks for too long.

Gearset now batches records according to the records that they are likely to lock, making it less likely you’ll have multiple batches needing to lock the same set of records. So when you deploy several thousand Contact records, Gearset now batches these based on the Account records that the Contact records belong to. As the batches don’t need to lock the same Accounts, they don’t need to wait for each other to finish.

This is a change we’ve made behind the scenes. You should notice that your data deployments are more successful without you needing to do anything.
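One way to picture batching by parent record is to group Contacts by their Account and pack whole groups into batches, so each Account ideally appears in only one batch. The Python sketch below is a simple greedy packing under assumptions of our own: the `(contact_id, account_id)` tuple shape, the IDs and both helper names are hypothetical, and this is not Gearset's actual algorithm. An Account with more child records than fit in a batch still has to span batches.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical record shape: (contact_id, account_id).
Contact = Tuple[str, str]


def batch_by_parent(contacts: List[Contact], batch_size: int = 10_000) -> List[List[Contact]]:
    """Greedily pack each Account's Contacts together so batches rarely share an Account."""
    groups: Dict[str, List[Contact]] = defaultdict(list)
    for contact in contacts:
        groups[contact[1]].append(contact)

    batches: List[List[Contact]] = []
    current: List[Contact] = []
    # Place the largest groups first; oversized groups are split across batches.
    for group in sorted(groups.values(), key=len, reverse=True):
        for start in range(0, len(group), batch_size):
            chunk = group[start:start + batch_size]
            if len(current) + len(chunk) > batch_size:
                batches.append(current)  # chunk doesn't fit, so close this batch
                current = []
            current.extend(chunk)
    if current:
        batches.append(current)
    return batches


def shared_parents(batches: List[List[Contact]]) -> Set[str]:
    """Account IDs referenced by more than one batch (potential lock conflicts)."""
    seen: Dict[str, Set[int]] = defaultdict(set)
    for index, batch in enumerate(batches):
        for _, account_id in batch:
            seen[account_id].add(index)
    return {account for account, indexes in seen.items() if len(indexes) > 1}


# Twelve Contacts spread across three Accounts, with a small batch size for illustration.
contacts = [(f"003-{n}", f"001-{'ABC'[n % 3]}") for n in range(12)]
naive = [contacts[i:i + 5] for i in range(0, len(contacts), 5)]
grouped = batch_by_parent(contacts, batch_size=5)
print(sorted(shared_parents(naive)))    # ['001-A', '001-B', '001-C']
print(sorted(shared_parents(grouped)))  # []
```

With the naive split every batch touches every Account, so the batches contend for the same locks. With grouping, no two batches need to lock the same Account.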

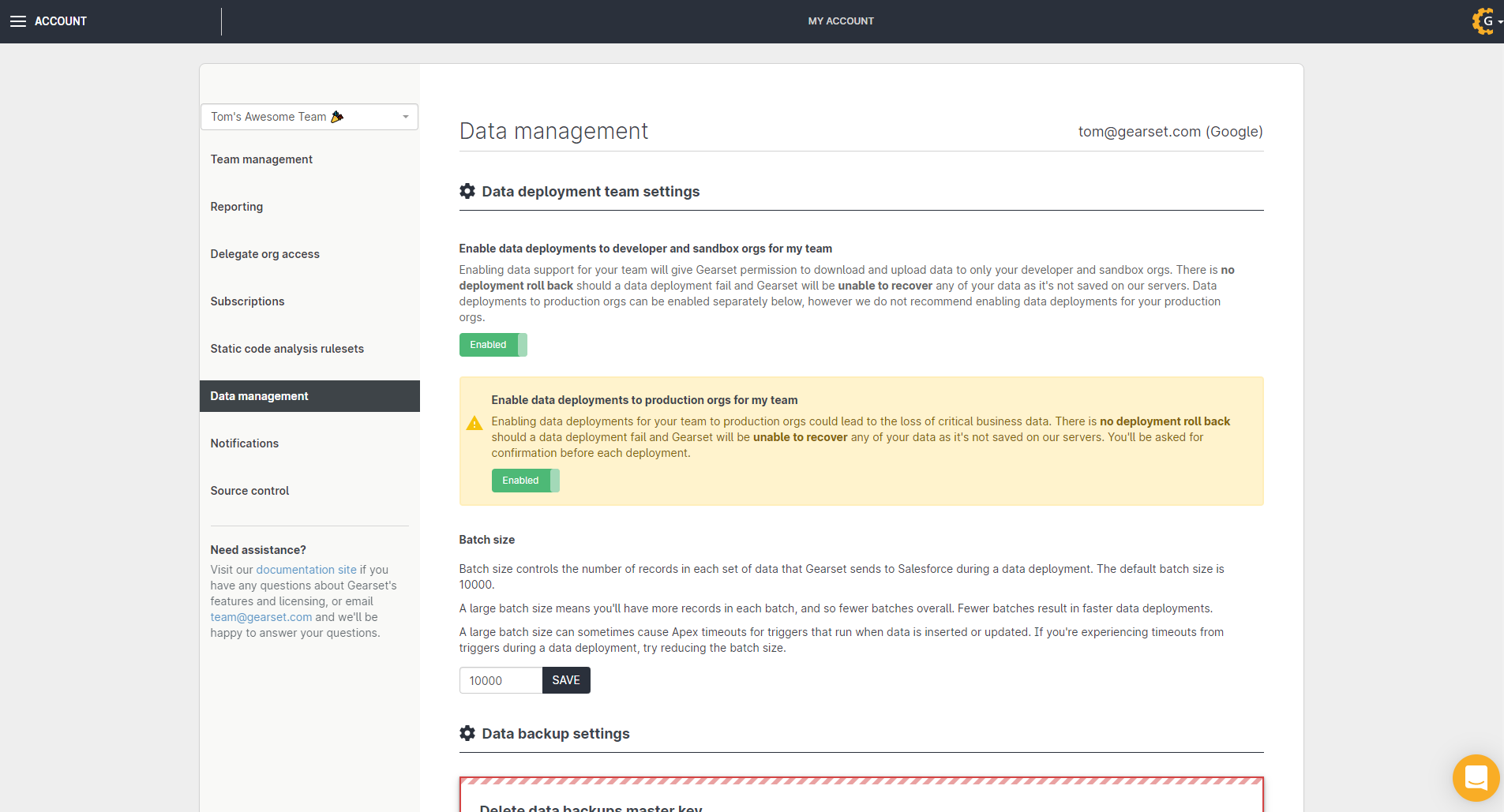

2. Giving you control over batch sizes

The second change we’ve made is to let you set the batch size for data deployments. This helps deployments succeed that would otherwise fail because they fire a lot of trigger automation. If a deployment is going to trigger automated processes, smaller batches reduce the number of processes that try to run at the same time, which reduces the chance of the deployment failing with a CPU timeout.
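As a rough way to reason about batch size and CPU time, you could estimate how much CPU your automation uses per record and work backwards from the ten-second limit. The Python sketch below is a deliberately simplified model: it assumes a fixed, hypothetical cost per record and treats a batch as a single unit of work. The helper names and the 25 ms figure are illustrative assumptions, not Salesforce or Gearset behaviour.

```python
# Synchronous Apex CPU limit described above, in milliseconds.
CPU_LIMIT_MS = 10_000


def estimated_cpu_ms(records: int, ms_per_record: float, overhead_ms: float = 0.0) -> float:
    """Rough automation cost if all records were handled as one unit of work (assumption)."""
    return overhead_ms + records * ms_per_record


def largest_safe_batch(ms_per_record: float, overhead_ms: float = 0.0,
                       headroom: float = 0.8, cap: int = 10_000) -> int:
    """Biggest record count whose estimate stays within `headroom` of the CPU limit."""
    if ms_per_record <= 0:
        raise ValueError("ms_per_record must be positive")
    budget = CPU_LIMIT_MS * headroom - overhead_ms
    if budget <= 0:
        return 0
    return min(cap, int(budget // ms_per_record))


# Hypothetical: triggers, flows and processes cost about 25 ms of CPU per Contact.
print(estimated_cpu_ms(500, 25.0) > CPU_LIMIT_MS)  # True: 12,500 ms
print(largest_safe_batch(25.0))  # 320
```

The headroom factor leaves a margin because real automation costs vary from record to record.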

Team owners can change the batch size for data deployments in Account > Data management, and the setting applies to the whole team. Unless you’re running into deployment errors, it’s worth keeping the batch size at 10,000, for two reasons. First, a smaller batch size means more batches overall, so deployments may take longer. Second, Salesforce limits the Bulk API to 15,000 batches per rolling 24-hour period, so if you deploy lots of records with a small batch size, you might hit this limit.
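To check whether a smaller batch size risks the daily batch allocation, you can compare the number of batches a deployment needs against the 15,000 limit. The Python sketch below uses hypothetical helper names. The `already_used` parameter is an assumption standing in for batches your org has already submitted in the current 24-hour window, since the allocation covers all Bulk API usage rather than a single deployment.

```python
import math

# Salesforce Bulk API allocation described above: 15,000 batches per rolling 24 hours.
DAILY_BATCH_LIMIT = 15_000


def batches_needed(record_count: int, batch_size: int) -> int:
    return math.ceil(record_count / batch_size)


def fits_daily_allocation(record_count: int, batch_size: int, already_used: int = 0) -> bool:
    """Would this deployment stay within the rolling 24-hour batch allocation?"""
    return already_used + batches_needed(record_count, batch_size) <= DAILY_BATCH_LIMIT


def smallest_batch_size(record_count: int, already_used: int = 0) -> int:
    """Smallest batch size that keeps the deployment within the remaining allocation."""
    remaining = DAILY_BATCH_LIMIT - already_used
    if remaining <= 0:
        raise ValueError("no batches left in the current 24-hour window")
    return max(1, math.ceil(record_count / remaining))


print(batches_needed(2_000_000, 10_000))      # 200
print(fits_daily_allocation(2_000_000, 100))  # False: 20,000 batches
print(smallest_batch_size(2_000_000))         # 134
```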

Try Gearset for your data deployments

Whether you’re seeding sandboxes or migrating data between orgs for any other reason, Gearset’s free 30-day trial gives you access to the whole platform. Get started today to see how easy our sandbox seeding solution makes it to deploy your Salesforce data between orgs.