The DORA metrics are the gold standard for teams wanting to assess their DevOps success and drive improvements throughout their entire development process. But what about other metrics that can help teams gain valuable insights into the business? And how can they help your team reach DevOps success?

In this post we’ll look at some measurements that your team should care about, alongside DORA, and why they matter.

What are the DORA metrics?

When we think about DevOps metrics, the first thing people often mention is the DORA metrics. This group of metrics, from the Google DevOps Research and Assessment (DORA) team, pinpoints four key metrics that measure DevOps process health:

Deployment Frequency: how often an organization successfully releases to production.

Lead Time for Changes: the amount of time it takes a committed change to get into production.

Mean Time to Recover: the time it takes to recover from a failure in production.

Change Failure Rate: the percentage of deployments causing a failure in production.
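As a rough illustration, the four metrics above can be computed from a simple log of deployments. This is a minimal sketch, not an official DORA calculation: the record fields (`committed`, `deployed`, `failed`, `recovered`) and the sample data are hypothetical, and your own tooling will likely expose this information differently.

```python
from datetime import datetime
from statistics import mean

# Hypothetical deployment log; field names are illustrative assumptions.
deployments = [
    {"committed": datetime(2024, 5, 1, 9), "deployed": datetime(2024, 5, 2, 9),
     "failed": False, "recovered": None},
    {"committed": datetime(2024, 5, 6, 9), "deployed": datetime(2024, 5, 8, 9),
     "failed": True, "recovered": datetime(2024, 5, 8, 13)},
    {"committed": datetime(2024, 5, 13, 9), "deployed": datetime(2024, 5, 14, 9),
     "failed": False, "recovered": None},
    {"committed": datetime(2024, 5, 20, 9), "deployed": datetime(2024, 5, 21, 9),
     "failed": False, "recovered": None},
]


def dora_metrics(deployments, period_days):
    """Summarise the four DORA metrics for one reporting period."""
    if not deployments:
        raise ValueError("at least one deployment is needed")
    # Lead time: commit to production, in seconds.
    lead_times = [(d["deployed"] - d["committed"]).total_seconds() for d in deployments]
    failures = [d for d in deployments if d["failed"]]
    # Recovery time: failed deployment to restored service, in seconds.
    recoveries = [(d["recovered"] - d["deployed"]).total_seconds()
                  for d in failures if d["recovered"] is not None]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "lead_time_hours": mean(lead_times) / 3600,
        "change_failure_rate_pct": 100 * len(failures) / len(deployments),
        "mean_time_to_recover_hours": mean(recoveries) / 3600 if recoveries else None,
    }


metrics = dora_metrics(deployments, period_days=28)
print(metrics)
```

With this sample data, one of the four deployments failed and took four hours to recover, so throughput and stability can be read side by side from the same log.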

These metrics have become a standard across the software development industry due to their flexibility and applicability to so many businesses and team structures.

Throughput vs. Stability

The DORA metrics consider two important dimensions that help businesses assess their software delivery performance:

Throughput (or velocity): The speed of making updates of any kind, normal changes and changes in response to failure.

Stability (or resilience): The likelihood of deployments unintentionally leading to immediate additional work which disrupts users.

These two factors naturally pull in different directions, and focusing on only one can hurt your business. For example, if a team made all of their changes directly in production, their lead time would be 0 (because changes go straight to production). But it’s likely that their change failure rate would be very high because there wouldn’t be sufficient testing to make sure changes are correct — meaning the business ROI would suffer.

Similarly, if a team never released to production, then their change failure rate would be 0 (no changes mean no failures!), but their deployment frequency would plummet and they wouldn’t be delivering ROI to their business.

But the DORA team suggests that velocity and resilience actually go hand in hand. The best performers tend to do well on both velocity and resilience, while the lowest performers do poorly on both.

DORA is a great place to start when measuring DevOps performance metrics, but we know that teams often want to think more directly about ROI of the whole process and delivering business value.

To help teams think beyond DORA metrics, we’ve highlighted some other key performance indicators to enhance a business’s overall DevOps strategy.

Other metrics you should care about: Throughput

Throughput is all about the speed at which changes are delivered. A high-throughput DevOps process is great for businesses and can mean a huge increase in Salesforce ROI. The following metrics help teams see how quickly their changes are making it to end users, and the impact that has on ROI for the overall business.

Deployment time

It makes sense that if you spend an hour validating every feature, then the lead time for that feature will increase. So it’s useful to track the amount of time that validations and deployments take in each of your Salesforce environments. You can query this through Salesforce’s Tooling API by accessing the DeployRequest object. You’ll also be able to see this information in Gearset very soon!

If you want to improve your deployment time, a great place to start is your Salesforce tests. In CI jobs, Gearset can intelligently select which unit tests need to be run based on the Apex changes made in the deployment. Unit tests can also be rewritten to run significantly faster (for example, by removing the tested code’s dependence on inline SOQL queries). By tracking your deployment time, you’ll be able to see whether the changes you’ve made lead to improvements in your deployment process and increase ROI.
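To make the tracking concrete, here is a minimal sketch that turns deployment records into average durations. It assumes you have already retrieved the records from the DeployRequest object, and that each one carries `StartDate`, `CompletedDate` and `CheckOnly` values in Salesforce’s usual timestamp format. Treat those field names and the sample data as assumptions to check against the Tooling API documentation.

```python
from datetime import datetime
from statistics import mean

# Assumed timestamp format, e.g. "2024-05-01T10:00:00.000+0000".
SF_FORMAT = "%Y-%m-%dT%H:%M:%S.%f%z"

# Hypothetical records, shaped like rows returned for DeployRequest.
records = [
    {"StartDate": "2024-05-01T10:00:00.000+0000",
     "CompletedDate": "2024-05-01T10:45:00.000+0000", "CheckOnly": True},
    {"StartDate": "2024-05-01T11:00:00.000+0000",
     "CompletedDate": "2024-05-01T11:30:00.000+0000", "CheckOnly": False},
    {"StartDate": "2024-05-02T09:00:00.000+0000",
     "CompletedDate": "2024-05-02T09:50:00.000+0000", "CheckOnly": False},
]


def duration_minutes(record):
    """Minutes between the start and completion of one deploy request."""
    start = datetime.strptime(record["StartDate"], SF_FORMAT)
    end = datetime.strptime(record["CompletedDate"], SF_FORMAT)
    return (end - start).total_seconds() / 60


def average_minutes(records, check_only):
    """Average duration of validations (check_only=True) or deployments."""
    durations = [duration_minutes(r) for r in records if r["CheckOnly"] is check_only]
    return mean(durations) if durations else None


print("validations:", average_minutes(records, check_only=True))
print("deployments:", average_minutes(records, check_only=False))
```

Keeping validations and deployments separate makes it easier to see which of the two is driving any change in overall deployment time.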

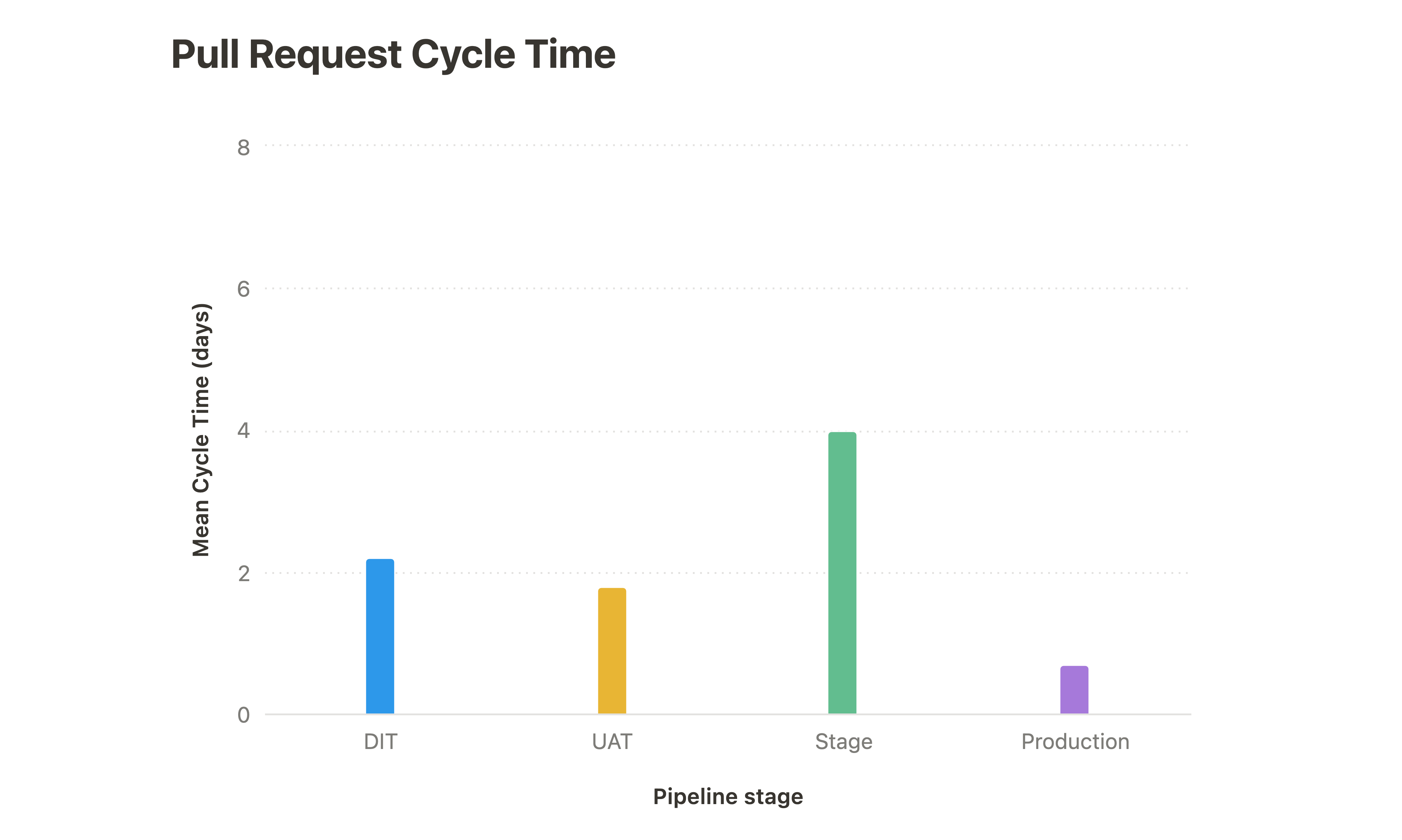

Pull request cycle time

In the context of Gearset Pipelines, pull request cycle time breaks the top-level lead time for changes metric into a number of sections. It measures the amount of time that pull requests sit open at different points in the DevOps process, and pinpoints where they’re building up while waiting for a team to take action.

This can be accessed in a number of ways, but Gearset’s reporting API provides this breakdown under the lead time for changes endpoint. To measure the cycle time of the different stages of your pipeline, you need the average time between a pull request being opened and its deployment to the relevant environment. This metric will help you identify whether specific stages are becoming a bottleneck in your process and where you can make improvements.
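The averaging step described above can be sketched in a few lines. The data shape, field names and environment names below are hypothetical and are not the format returned by any particular API; the point is only to show the calculation of average time from pull request opened to deployment, per environment.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical pull request data; environment names are illustrative.
pull_requests = [
    {"opened": datetime(2024, 5, 1, 9),
     "deployed": {"integration": datetime(2024, 5, 1, 13),
                  "uat": datetime(2024, 5, 2, 9),
                  "production": datetime(2024, 5, 3, 9)}},
    {"opened": datetime(2024, 5, 6, 9),
     "deployed": {"integration": datetime(2024, 5, 6, 11),
                  "uat": datetime(2024, 5, 8, 9),
                  "production": datetime(2024, 5, 9, 9)}},
]


def average_cycle_hours(pull_requests):
    """Average hours from PR opened to deployment, for each environment."""
    hours_by_env = defaultdict(list)
    for pr in pull_requests:
        for env, deployed_at in pr["deployed"].items():
            elapsed = (deployed_at - pr["opened"]).total_seconds() / 3600
            hours_by_env[env].append(elapsed)
    return {env: mean(hours) for env, hours in hours_by_env.items()}


for env, hours in average_cycle_hours(pull_requests).items():
    print(f"{env}: {hours:.1f} hours")
```

In this sample, the jump between the integration and UAT averages is much larger than the jump between UAT and production, which is the kind of gap that points to a bottleneck stage.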

Feature cycle time

Feature cycle time is slightly different to lead time for changes because it includes the amount of time the actual change takes to be developed, not just the amount of time that the change takes to be shipped once it’s been built.

The best source of this information is your issue tracking software, like Jira, which lets you track the time from when an issue was first picked up by a developer to when it was marked as completed (either by an automatic process within Gearset’s Pipelines or through manual tracking by a product manager).

Feature cycle time gives a broad perspective over the whole cycle of feature development, and when combined with other metrics, allows you to zoom into specific areas where improvements can be made to enhance ROI.

Change volume

Change volume measures the number of changes that are released during a set period. This is one of the ultimate measures of ROI across your development process, because users care about how many changes are being released, not just the frequency of your deployments. This metric depends on far more than just your DevOps process, as it also encompasses product management, developer experience and code base health.

In order to measure this metric over time, it’s useful to record story points against different features in your issues tracking software. Tools like Jira allow you to then access this information and see the number of completed story points over time.
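Once story points are recorded, totalling them per period is straightforward. The sketch below assumes you have exported completed issues into a simple list; the issue keys, field names and values are hypothetical and don’t reflect the shape of any specific Jira export.

```python
from collections import Counter
from datetime import date

# Hypothetical export from an issue tracker; field names are assumptions.
issues = [
    {"key": "EX-1", "story_points": 3, "completed": date(2024, 4, 12)},
    {"key": "EX-2", "story_points": 5, "completed": date(2024, 4, 26)},
    {"key": "EX-3", "story_points": 2, "completed": date(2024, 5, 3)},
    {"key": "EX-4", "story_points": 8, "completed": None},  # still in progress
]


def points_per_month(issues):
    """Total completed story points, keyed by "YYYY-MM"."""
    totals = Counter()
    for issue in issues:
        if issue["completed"] is not None:
            totals[issue["completed"].strftime("%Y-%m")] += issue["story_points"]
    return dict(totals)


print(points_per_month(issues))
```

Issues that aren’t finished yet are left out, so the totals only reflect changes that were actually released in each month.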

Other metrics you should care about: Stability

Throughput is a great place for teams to start measuring, but it’s just one side of the coin. The stability of the Salesforce platform is also a key contributor to overall ROI for a business. To use these metrics you’ll need to record and measure more information, but the outcome is worth it.

Impact adjusted change failure rate

In the DORA metrics, change failure rate is the gold standard for measuring platform stability, but we know that not all production failures are created equal. A failure which slows down a single user’s workflow is significantly different to a failure which leaves an entire organization unable to access user data. Both could be flagged for improvement and counted towards the change failure rate, but their impact isn’t equal, so they should be weighted differently.

It’s common to assign a simple severity value to a production failure. For example, P1 would be given to a failure which impacts a large number of users, while P4 might be a lower-impact failure affecting a smaller subset of users. From there, assign a number of points to each of the P levels (for example, 10 points for P1 and 1 for P4). This measures the impact of deployment failures rather than just counting them. The total points can then be expressed as a percentage of the number of deployments to get the impact adjusted change failure rate.

Here’s an example:

| Issue severity | Number of users affected | Severity of impact | Points |
|---|---|---|---|
| P1 | All Salesforce users | Unable to use the platform | 10 |
| P2 | All Salesforce users | Unable to complete core workflows | 5 |
| P3 | Specific team(s) | Unable to complete core workflows | 2 |
| P4 | Specific individual(s) | Core workflows slowed down | 1 |

So if you have done 100 deployments in the last month, with 1 P1 issue and 2 P2 issues, then you would have 20 issue points (1 × 10 + 2 × 5), giving a 20% impact adjusted change failure rate. Although this number may not paint the full picture by itself, it can be used to analyze how impactful your failures are and to compare that impact over time.
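The same arithmetic can be written as a small function. This is only a sketch of the calculation described above, using the example point values from the table; the function and variable names are illustrative.

```python
# Points per severity level, taken from the example table above.
SEVERITY_POINTS = {"P1": 10, "P2": 5, "P3": 2, "P4": 1}


def impact_adjusted_cfr(issue_counts, deployments):
    """Issue points as a percentage of the number of deployments."""
    if deployments <= 0:
        raise ValueError("deployments must be a positive number")
    points = sum(SEVERITY_POINTS[severity] * count
                 for severity, count in issue_counts.items())
    return 100 * points / deployments


# 1 P1 issue and 2 P2 issues across 100 deployments.
print(impact_adjusted_cfr({"P1": 1, "P2": 2}, deployments=100))  # 20.0
```

Because a single P1 counts for 10 points, this figure can exceed 100% in a bad month, so it is best read as a weighted score to compare over time rather than a literal share of deployments.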

Rework rate

This metric was introduced in the 2024 DORA report and is the percentage of deployments that “weren’t planned but were performed to address a user-facing bug in the application”. When combined with change failure rate, the two metrics provide a reliable indicator of software delivery stability.

This can be measured by counting the number of issues marked as “bugs” in your issue tracking software, rather than “feature development”. Track how many of these issues caused unplanned deployments. A high rework rate suggests that the current process design (from code base to review process) allows bugs to sneak in and requires developers to go back and change their work on a regular basis. This hampers not only stability for users, but also the throughput that a development team can achieve.
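As a minimal sketch of that calculation, assume each deployment record has been tagged with whether it was an unplanned bug fix. The `unplanned_bug_fix` flag and the sample data are hypothetical; in practice you would derive the flag by linking deployments to the “bug” issues in your tracker.

```python
# Hypothetical deployment log: 20 deployments, 3 of them unplanned bug fixes.
deployments = (
    [{"id": n, "unplanned_bug_fix": False} for n in range(1, 18)]
    + [{"id": n, "unplanned_bug_fix": True} for n in range(18, 21)]
)


def rework_rate(deployments):
    """Percentage of deployments that were unplanned fixes for user-facing bugs."""
    if not deployments:
        return 0.0
    unplanned = sum(1 for d in deployments if d["unplanned_bug_fix"])
    return 100 * unplanned / len(deployments)


print(f"Rework rate: {rework_rate(deployments):.1f}%")
```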

A high rework rate can also affect team health, as team members become frustrated by needing to constantly redo work that they felt was complete. This has a wider effect on team morale and could affect the reputation of the team to other internal stakeholders.

Defects escape rate

Sticking with the concept of “not all failures being equal”, we know that a failure in SIT (System Integration Testing) is less of an issue than a failure in production. So if we’re able to measure the number of features which are rejected at earlier stages like SIT or in UI tests, we can get a sense of how effective testing is at detecting defects.

A simple measure, like (Number of Defects Found in Production / Total Number of Defects) × 100, is called the “defects escape rate”. If this number is high it can indicate a problem like:

Your testing environments aren’t fit for purpose because of unmet data requirements.

Manual testing methods aren’t effective because they’re not covering realistic workflows.

Randomness in testing is causing the tests to become flaky between environments.
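The defects escape rate formula above is simple to compute once each defect is tagged with the stage where it was found. The stage names and sample data below are hypothetical.

```python
# Hypothetical defect log: where each defect was first detected.
defects = (
    [{"found_in": "sit"}] * 5
    + [{"found_in": "ui_tests"}] * 3
    + [{"found_in": "production"}] * 2
)


def defect_escape_rate(defects):
    """(Defects found in production / total defects) x 100."""
    if not defects:
        return 0.0
    escaped = sum(1 for d in defects if d["found_in"] == "production")
    return 100 * escaped / len(defects)


print(f"Defects escape rate: {defect_escape_rate(defects):.1f}%")
```

Here 2 of the 10 recorded defects reached production, so 80% were caught by earlier testing stages.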

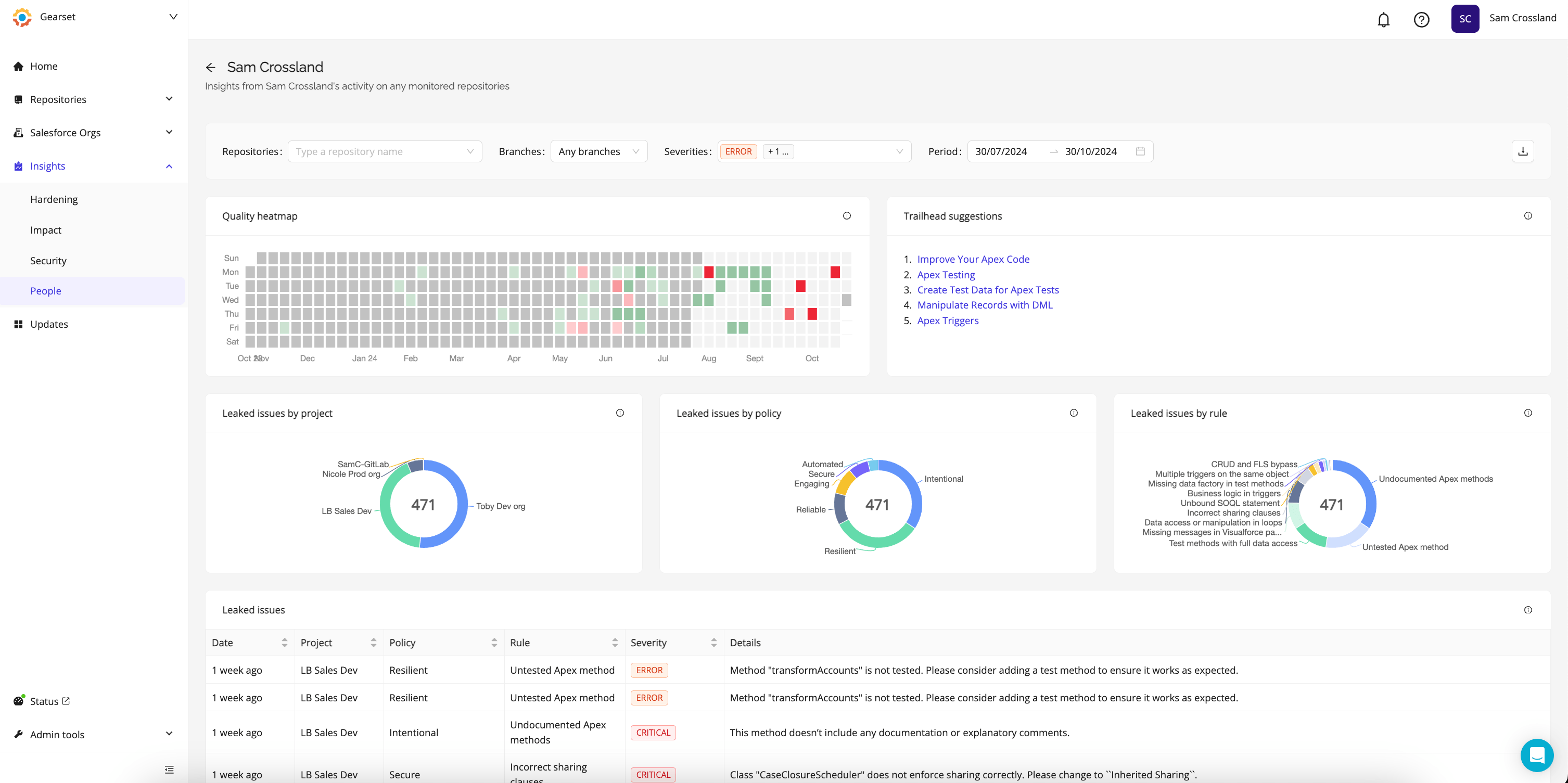

Code quality

This metric assesses the quality of the code written by the development team and also the code in the overall code base. By combining two specific approaches, we not only establish objective measures for code quality but also identify areas for improvement.

The first one is simple: ask the developers. They are the ones who work in the code base most of the time, and they will have opinions on whether the code is easy to work with or whether they have to work around its structure to achieve basic goals. These conversations should be an ongoing part of collaboration in sprints, but if you also track the answers over time, they can give good quantitative signals for code quality. Some questions to get you started are:

How would you describe the overall quality of the codebase?

How often do you encounter issues with unclear or overly complex code?

How easy is it to add a new feature or make changes without causing bugs in other parts of the code base?

This developer-led approach can be combined with more objective tools like automatic code analysis. Code Reviews offers continual repository scans to identify security issues and areas where the code could cause vulnerabilities in the pipeline. Specifically tracking the number of errors through Code Reviews will give you quantitative measures of code quality over time. With Code Reviews, you can also use metrics to measure organizational health.

These four measures combined with the existing DORA metrics provide the ability to not only better understand the stability of your Salesforce platform, but to identify actionable areas where it can be improved.

Measuring success beyond DORA

The DORA metrics are great at giving teams actionable insights that encourage a healthy balance between speed and stability. But by integrating these additional metrics alongside the DORA metrics, teams can gain a comprehensive view of their entire development process. It’s also a good idea to combine DORA metrics with observability practices. This lets you see both the results (DORA metrics) and the underlying processes that are driving those results, so you can make more effective improvements.

This holistic approach empowers teams to pinpoint areas for improvement, refine workflows, and boost overall performance. DevOps is an evolving way of working that thrives on continuous improvement and adaptation. By adopting these broader metrics, your team can ensure sustained growth, enhanced platform stability, and a bigger ROI for your organization. You can find out more about what you can track using Gearset’s API, or see how your team is tracking on velocity and resilience with our DevOps maturity assessment. Or if you want to learn more about DORA, read the key takeaways from the 2024 report.