You log in to Salesforce and head to the System Overview page — only to be greeted with a 95% storage warning. Reports are failing, list views are timing out, and frustrated users are flooding your inbox. After some digging, you discover the culprit: EmailMessage records are taking up 18% of your storage, bloated by years of HTML emails and attachments.

At this point, one thing is clear: you need to free up space fast. But that raises the big question — what’s the best way to archive this data without disrupting your users or losing critical information?

At first glance, Salesforce Big Objects can be an attractive choice. They’re native, designed for scale, and available without additional licensing. But when it comes to complex archiving and data restoration, you’ll find that Big Objects require more extensive development work, have strict indexing requirements, and don’t support automation or standard Salesforce reporting.

If you’re dealing with storage issues, this guide is for you. We’ll break down what options you have, including Salesforce’s Big Objects, what it really takes to use them effectively, and why they may not always be the most practical choice for long-term data archiving.

Standardizing Salesforce delivery in Manufacturing: Lessons from a release team

Understanding Salesforce Big Objects: Technical foundation

Salesforce Big Objects are a special type of custom objects designed to hold massive amounts of data, often billions of records, within your org. But they don’t work the same way as your standard Salesforce objects. Instead of using the usual database that powers most of your org, Big Objects are stored in a system built specifically for massive scale.

Big Objects are non-transactional, so partial write failures are possible and expected. Salesforce recommends implementing your own retry logic to handle these failures, but keep in mind that this requires additional development and testing to ensure your data loads are reliable.

Big Objects vs. External Objects

It’s easy to confuse Big Objects with External Objects, but they serve very different purposes — and it all comes down to where your data lives.

Big Objects keep your data inside Salesforce, but in a storage system designed for scale rather than day-to-day access. They’re a good fit when you need to archive large volumes of historical data and keep it within your org.

External Objects, on the other hand, don’t actually store data in Salesforce at all. Instead, they display data from outside Salesforce using OData connections. They’re great for integrating external systems, but they’re not designed for archiving data you want to keep securely within your org.

Creating and setting up Big Objects

Setting up your first Big Object isn’t as simple as clicking a button. Unlike standard objects, which you can create through the Salesforce UI, Big Objects require either a Metadata API deployment or manual setup through Setup > Big Objects.

This process involves defining fields, setting up indexes, and deploying. Unlike relational databases, you’ll also be using methods like Database.insertImmediate() for synchronous operations (which come with governor limits) or Database.insertAsync() for large-scale loads.

The 5-field index limitation

When you create a Big Object, you’re allowed one compound index with up to 5 fields. Indexes are crucial when you’re archiving, because they determine how you’ll be able to search and retrieve your data later. You can’t modify the index after the object is created. This can cause you problems down the line during data recovery scenarios where you may need to search for data based on different criteria. If you make the wrong decision here, you’ll need to start over — there’s no quick fix.

Accessing Big Object data

Until Summer ’23, Salesforce offered Async SOQL (Salesforce Object Query Language), a feature that let you run large-scale queries on Big Objects in the background. It’s retirement leaves you with two ways to access Big Object data:

Batch Apex allows you to process large datasets in manageable chunks. This requires careful management of governor limits and error handling.

Bulk API is ideal for extracting data to external systems for reporting or analysis, but comes with its own set of complexities, such as managing authentication, handling large CSV files, dealing with API rate limits, and transforming data into a format your target system can consume.

With Async SOQL gone, you can’t just run a query and wait for the results to land in your inbox anymore. Instead, you’ll need to build and maintain batch jobs or external data pipelines — adding even more complexity to an already challenging implementation.

Using Big Objects for archiving: What to expect

Moving millions of records out of production sounds simple enough. But with Big Objects, the reality is more complicated. Without careful planning and a clear understanding of the limitations, you can end up with data that’s hard to query, slow to restore, and expensive to maintain. Here are some things to consider if you want to use Big Objects for your archiving:

Understand Big Objects’ use cases

Big Objects are designed for very specific scenarios, typically data that’s written once and rarely accessed — think audit trails (Field Audit Trail) or sensor data.

If you need to quickly restore data for active business operations — such as supporting customer service, sales, or any other department that requires frequent access — Big Objects are not the right solution.

Calculate the total cost of storage. Big Objects are available by default in Salesforce Enterprise, Performance, Unlimited, and Developer Editions. But if your org needs to archive more than the one million records that are included by default, you’ll likely need to purchase additional capacity.

You’ll also need to take into account the cost of development. Schema design, index planning, batch job development with retry logic, and custom UIs for accessing data all take time. Restores, in particular, can be complex and resource-intensive. While the storage itself may appear inexpensive, the operational overhead can add up quickly.

Consider record IDs and relationships

In standard Salesforce objects, every record has a unique 18-digit ID that keeps relationships between records intact — for example, Accounts know which Opportunities belong to them, and Opportunities know which Line Items they own.

Big Objects work differently. Instead of those familiar record IDs, they use internal identifiers that you can’t query in the same way. This makes it harder to restore data to its exact original state if you ever need to re-insert it back into standard objects.

This means that when you archive an Account along with its related Opportunities into Big Objects, the relationship between them is lost. Without the standard Salesforce ID, you can’t easily query or restore those records and their relationships back together.

You can’t simply “restore” an Opportunity with its related Account — you need to rebuild the entire relationship hierarchy from scratch. This manual process not only introduces risk but also creates a massive burden on the recovery workflow.

Prepare for security and compliance

Big Objects offer basic security controls — object and field-level permissions only. There’s no sharing rules or team hierarchies to customize access in a granular way. Essentially, everyone with access to Big Objects can see everything — or nothing. This can be a serious issue if you have a complex security model in place within Salesforce.

Features like Shield Platform Encryption don’t extend to Big Objects. For organizations bound by compliance regulations like GDPR, HIPAA, or financial regulations, this can trigger major compliance violations.

Big Objects can also cause frustration because there’s no built-in UI for deleting a customer’s data. Instead, you’ll need to write custom Apex code to identify and remove records based on your limited index. And, when it comes to Data Subject Access Requests (DSARs) — requests from customers to access or delete their data — Big Objects can slow down response times. Since there is no easy UI or automation to process these requests, what should be a simple task could end up taking days, not hours, to resolve.

Design for query and storage needs

When designing your archive system, one of the most important decisions is how to structure your indexes. Indexing is critical for query performance, and it’s essential to think about how users will search your data, not just how it’s structured.

For example, if you’re archiving EmailMessage records, you should index by AccountId first if users will search by account. If you run time-based reports, index by CreatedDate. Remember, once you’ve set your index, you can’t modify it — so make sure you get it right the first time. If you don’t, you’ll have to start over completely.

Salesforce also sets a 100 Big Objects per org limit, which can quickly become a bottleneck if you’re managing multiple record types.

Why teams choose Gearset for external archiving

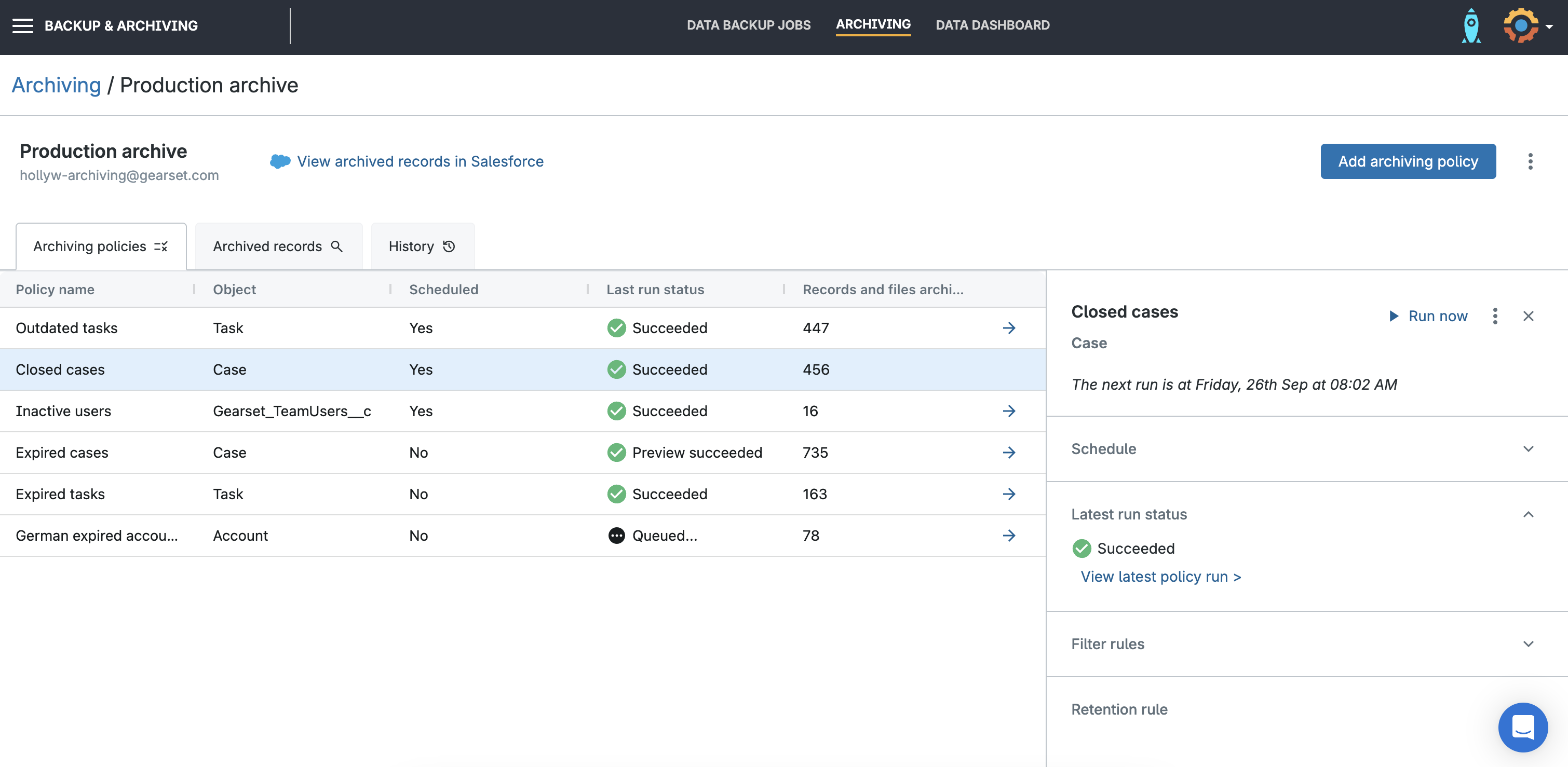

As Salesforce organizations scale, managing storage efficiently becomes more critical. Gearset’s external archiving solution offers a way to solve this problem with features and capabilities that extend beyond the limitations of Salesforce’s native options, like Big Objects.

Here’s why more teams are choosing Gearset for archiving:

True storage reduction through off-platform archiving: Gearset’s Data Archiving doesn’t just move data — it reduces storage by migrating records from Salesforce to Gearset, then deleting them from your Salesforce org to free up existing storage capacity. This approach genuinely reduces storage usage, unlike Big Objects, which keep data within Salesforce, continuing to count toward storage limits.

Unlimited storage container: Instead of paying for increased Salesforce storage over time, Gearset offers unlimited storage for archived records. This means you can avoid per-GB pricing and predict your costs based on the number of Salesforce users in your organization. No more worrying about scaling storage or fluctuating costs — Gearset simplifies your long-term planning.

Automated archiving rules: With Gearset, archiving isn’t manual — you can automate the process. Set up rules to automatically archive records based on record type or timeframe. Gearset will automatically move irrelevant data out of your org without requiring constant intervention. This ensures that your team doesn’t need to remember to archive data — it happens automatically, saving time and streamlining processes.

Preview and control: Before archiving data, Gearset lets you preview what’s about to be archived, providing full visibility. You can review and adjust your archiving jobs as needed before execution, which prevents surprises and ensures you maintain control over what’s moved and when.

Searchable archive: Searching archived records is a breeze with Gearset’s searchable archive. Retrieve records quickly by searching for anything within them, such as by record name or ID. You can even view archived records alongside their child records to maintain context. This makes audits, compliance checks, and general record retrieval efficient and hassle-free.

Files and attachments included: Unlike Big Objects, which do not support attachments or files, Gearset includes them in your archiving process. Archive data on standard objects, custom objects, and files/attachments. This comprehensive approach ensures that all your storage needs are covered in one solution.

Fast implementation: Gearset makes archiving simple to set up. There’s no software to download or install in your Salesforce orgs. Just connect your org to Gearset, and you’re ready to start archiving. There are no managed packages or custom development required, which saves time and resources.

Enterprise security: Gearset takes security seriously. We’re ISO 27001 certified and provide enterprise-grade encryption both in transit and at rest. You can choose where your data is hosted in data centers across the US, EU, Australia, or Canada. Additionally, Gearset is fully compliant with global standards like UK GDPR, EU GDPR, CCPA/CPRA, and HIPAA, ensuring that your data is always safe and compliant.

Always available: Since your archived data is stored off Salesforce servers, you’ll still have access to it even if there’s an outage within Salesforce. This feature ensures business continuity and gives you peace of mind knowing that your data is always available when you need it.

Archive viewer for easy access: Gearset’s Archive Viewer provides an easy way for non-Gearset users — like Sales and Support teams — to view archived data directly from Salesforce. This Lightning Web Component lets teams quickly access related archived records (such as tasks, cases, opportunities) without needing to restore them back into Salesforce. It’s a read-only view that ensures data stays secure while still being accessible.

The unified platform advantage: When backup meets archiving

Gearset’s complete DevOps platform offers a unique advantage by combining backup and archiving into a single solution. Rather than managing two separate systems, you only need to learn one restoration process, work with one vendor, and go through one security review. This simplicity significantly reduces risk, making your data management strategy both more effective and less complicated.

Consistent recovery regardless of data location

One of the key benefits of Gearset is the consistency of your recovery process. Whether the data you need is in your latest backup or last year’s archive, the restoration process remains identical. You’ll use the same user interface, the same workflow, and get the same reliable results every time. This consistency transforms recovery from an emergency scramble to a routine, predictable operation.

With Gearset, you can restore records quickly and reliably, whether you need to retrieve a single record or bulk data. Access controls ensure that only authorized users can archive or restore data, maintaining security throughout the entire lifecycle.

Unified monitoring and alerts

Managing both backup and archiving becomes even easier with Gearset’s monitoring and smart alerts. From a single platform you can track both your backup and archiving operations, giving you a comprehensive view of your org’s data.

Smart alerts keep you informed in real-time when there’s unusual data volumes being deleted or corrupted. We understand that “unusal” for one team is normal for another, so we let you set the limit to what’s usual for you.

Compliance without complexity

With automated retention policies, you can set up rules once and apply them universally across your data. Whether you need to retain financial records for seven years or ensure GDPR-compliant deletion after three years, Gearset automates these processes, saving you time and reducing the risk of human error.

Gearset also captures a full audit trail for every operation, ensuring that all actions are documented and accessible at any time. This makes it easier to meet compliance requirements while avoiding the complexity and manual work often associated with these tasks.

Your path to reliable data archiving

The choice is clear: Big Objects are suited for analytical data storage, but when your business relies on quick data recovery, when managed packages complicate your data model, or when users need daily access to archived records, only a purpose-built solution like Gearset delivers the performance and reliability you need.

Take the next step toward confident data archiving. Start your free 30-day Gearset trial or book a personalized demo with our Salesforce experts to experience how purpose-built archiving should work.