A new API version is a key part of each Salesforce release. As new functionality is added, or existing functionality enhanced, this is represented by new metadata types or new properties on existing metadata types.

Without careful Salesforce API management, new API versions can cause confusion. For example, you might suddenly see new differences in a comparison, or even experience blockage when a validation or deployment fails due to missing mandatory metadata. These risks are increased when sandboxes have different platform versions to production orgs.

Salesforce lets you use any supported version of the Metadata API — a tacit recognition that each new version can bring challenges as well as rewards. This feature switches control from Salesforce to the team/s responsible for the metadata and its deployment.

This in-depth guide explains how to handle API versioning, take control of the API version from Salesforce and make sure that platform releases no longer cause unpleasant surprises.

What does the API version represent?

There are separate but connected concepts around Salesforce release versions, and it’s important to understand what these are, and what they mean.

Platform version

This refers to the Salesforce release that a given org is running. Releases are named after the season in which they ship, e.g. Winter ’23.

The Salesforce upgrade process starts with preview sandboxes being upgraded, followed by phases of non-preview sandboxes, and finally production orgs.

It isn’t possible to control which version of Salesforce an org runs upon; this is centrally controlled by Salesforce based on the release pattern described above. You can find out which version your org is running on at status.salesforce.com.

A new platform version brings with it new versions of all Salesforce APIs. These are all numbered and Salesforce has never released a platform upgrade without a new API version.

Metadata API version

The XML metadata representing all the components and features that can be retrieved from and deployed to an org is versioned in step with the Salesforce platform version.

As new releases bring new functionality, this is all represented by additional items exposed on the Metadata API, whether that’s an entirely new metadata type, new XML properties within an existing type or new potential values for existing elements.

A single value controls which Metadata API version is used for retrieval and deployment. Typically, this is held in a file called package.xml alongside a manifest of the components to be deployed or retrieved. Where SFDX development practices are in use, it’s held in a file called sfdx-project.json instead. We’ll refer back to these files later.
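As a minimal sketch, assuming a standard package.xml (which declares its version in a `<version>` element under the `http://soap.sforce.com/2006/04/metadata` namespace) and an sfdx-project.json that uses the `sourceApiVersion` key, the Python below reads the controlling version from either file. The function names are illustrative, not part of any Salesforce or Gearset tooling.

```python
import json
import xml.etree.ElementTree as ET
from typing import Optional

# package.xml elements live in the Metadata API namespace
MD_NS = "{http://soap.sforce.com/2006/04/metadata}"


def version_from_package_xml(xml_text: str) -> Optional[str]:
    """Return the <version> value from package.xml content, or None if it is missing."""
    node = ET.fromstring(xml_text).find(f"{MD_NS}version")
    return node.text.strip() if node is not None and node.text else None


def version_from_sfdx_project(json_text: str) -> Optional[str]:
    """Return sourceApiVersion from sfdx-project.json content, or None if it is missing."""
    return json.loads(json_text).get("sourceApiVersion")


package_xml = """<?xml version="1.0" encoding="UTF-8"?>
<Package xmlns="http://soap.sforce.com/2006/04/metadata">
    <version>56.0</version>
</Package>"""
print(version_from_package_xml(package_xml))  # 56.0
print(version_from_sfdx_project('{"sourceApiVersion": "56.0"}'))  # 56.0
```

Either way, it is one value per project, which is why the rest of this guide treats that file as the single point of control.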

Other Salesforce APIs

You may be aware of other Salesforce APIs, such as the SOAP API, REST API or Bulk API. New versions of these APIs are also released with each new Salesforce platform version, but these are designed for interaction with data within the objects rather than for deployments.

The Tooling API is also versioned, and can be used for metadata deployments. We won’t cover the Tooling API in this guide, but it’s worth noting that any deployment tool should use the API version defined in the package.xml or sfdx-project.json file for consistency.

Flows and Apex classes also have their own API version, which is initially determined by the platform version they were first created on. Fortunately, this doesn’t determine, for example, which version of Apex each class is compiled against, only which fields on standard objects a class or flow can access.

The Metadata API

Before continuing, it’s important to nail down exactly what the Metadata API is, and what the different versions mean, particularly with regard to the orgs themselves.

First, let’s be clear what it isn’t. The provided API version number is not a request to Salesforce to somehow change the platform version in use; as already discussed, this isn’t controlled by any Salesforce user. Neither does the API version have to match the platform version in use on the target org for the deployment to succeed. This would make deployments fragile, add in an entirely unnecessary administrative task, and ultimately make the whole concept of multiple supported API versions entirely irrelevant.

Versioning mismatches can cause deployment errors, such as:

- Property ‘regionContainerType’ not valid in version 53.0
- Property ‘identifier’ not valid in version 51.0
- Property ‘filterFormula’ not valid in version 51.0
- Property ‘itemInstances’ not valid in version 48.0
- Property ‘processOrder’ not valid in version 48.0

Or simply:

Not available for deploy for this API version

Salesforce provides the ability to specify the Metadata API version for retrieval and deployment, so you can take control of versioning and eliminate such problems. But this option must be used correctly.

Each component on the Salesforce platform is represented by one or more items of metadata. These can range from large monolithic XML files (e.g. Profiles or Permission Sets), to very small XML files which accompany a code file (e.g. Apex Classes), to folders containing multiple files including an XML definition (e.g. Lightning Web Components). Each of these XML files contains a number of elements describing the details and behavior of the component it defines.

Put simply, the Metadata API defines which files are known to Salesforce at any supported version, and, for each of those, which elements exist at that version, and the valid values for those elements.

For example, Lightning Web Components were made generally available by Salesforce in the Spring ’19 release, which introduced v45 of the Metadata API. This means that any attempt to retrieve or deploy Lightning Web Components using a Metadata API version lower than v45 will fail, as the relevant type (LightningComponentBundle) didn’t exist on the API at that time.
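To make the rule concrete, here is a small Python sketch of it: a metadata type can only be retrieved or deployed at an API version at or above the one that introduced it. Only the LightningComponentBundle/v45 entry comes from this guide; the lookup table and function name are hypothetical, and in practice it is Salesforce, not a local table, that enforces this.

```python
# Hypothetical lookup: the minimum Metadata API version at which each type exists.
# Only the LightningComponentBundle entry (v45, Spring '19) is taken from this guide.
MIN_VERSION = {"LightningComponentBundle": 45.0}


def type_available(metadata_type: str, api_version: float) -> bool:
    """Return True if the type is known at api_version (types not in the table are assumed available)."""
    return api_version >= MIN_VERSION.get(metadata_type, 0.0)


print(type_available("LightningComponentBundle", 44.0))  # False: the type didn't exist yet
print(type_available("LightningComponentBundle", 56.0))  # True
```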

The critical point to note here is that an org will always be on the platform version that Salesforce last upgraded it to, and you can’t change this. But you can choose to retrieve or deploy metadata using any supported Metadata API version, and support for these continues for many years. Each API version will provide or expect the metadata in the format that was valid at that time.

In effect, the Metadata API allows us to make orgs pretend they’re in a past state and that certain things don’t yet exist, or exist in a different state. On the face of it, this might sound a little odd, but let’s explore how this functionality can be used to our benefit instead.

Potential problems with Salesforce API versioning

Most issues around platform versioning occur where a version control system (VCS) is in use. There’s a tension between the regular updates from Salesforce and the way a VCS will retain the state of files until they’re explicitly changed. Without careful management, control is lost.

Let’s look at some of the most common problems.

Stale metadata

Some teams choose not to put the package.xml under source control because the developers want to modify it locally without committing those changes.

For example, if adjusting a single Apex class and its associated test class locally, on deployment, the user wouldn’t want to deploy the whole application’s metadata back to the org, only the two amended classes. When committing those changes back to the VCS, it wouldn’t be desirable to also commit the package.xml file containing just those two classes, as any later branch then created would come with those two files pre-set in the package.xml file. The developer almost certainly would need to work on different components, and so would have to change the file contents again, resulting in an ongoing adjustment process.

Sometimes, users create a local package.xml file as they join a team or project, setting the API version to the most current version at that time, and the user doesn’t then increment the version, as no need to do so arises.

Alternatively, it might be that the user is proactive about updating the API version, but some metadata might have been initially created and committed to the VCS some time ago, and there’s been no reason for it to change since; no new functionality has been required on that component.

In either case, let’s assume that an item of metadata was retrieved using API version 50 and committed to the VCS at some point in the past.

Now consider that a subsequent Salesforce release (let’s use Winter ’23/v56 as an example) introduces a new mandatory element to the Metadata API specification for that particular component: essentially a breaking change. When deploying from source control to a production org on Winter ’23, the VCS has no package.xml file from which a tool such as Gearset can determine the relevant API version, so the deployment defers to the highest globally available version.

The problem here is that v56 of the Metadata API will demand the presence of the new mandatory element, which the version of the component stored in VCS doesn’t have. So, the deployment will start to fail as soon as Metadata API v56 is used.
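The fallback behind this failure can be sketched in a few lines. This is a hypothetical model of the behaviour described above, not Gearset’s actual implementation: a declared version wins, and with no declared version the highest globally available one is used, which is what pulls a v50-era component into a v56 deployment.

```python
from typing import Optional


def effective_api_version(declared: Optional[str], highest_ga: str) -> str:
    """Hypothetical model: use the version file's value if present, else the highest GA version."""
    return declared if declared else highest_ga


# Component last retrieved at v50; production is on Winter '23 (v56)
print(effective_api_version(None, "56.0"))    # 56.0: no version file, so the new mandatory element is required
print(effective_api_version("50.0", "56.0"))  # 50.0: the declared version matches the stored metadata
```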

Release crossover period

The same issue can occur when Salesforce is rolling out platform upgrades to orgs, and the package.xml file is not under version control.

Sandboxes are upgraded before production orgs, so customers can test out the new functionality and ensure no regressions are caused by the platform upgrade.

Salesforce also provides release preview sandboxes, since plenty of active development work is undertaken in development sandboxes and it would obviously be a blocker for the upgrade to introduce a regression defect during an active development window.

Release preview sandboxes are upgraded before non-release preview sandboxes. Any sandbox created or refreshed during the release preview window will be created on a preview instance, and these will continue to be upgraded in the preview window for future releases.

It’s best practice to manage sandbox refresh activities so that development environments are not on preview instances. But ideally teams will have at least one sandbox which is on a preview instance to enable release preview testing. This is where you can identify defects for Salesforce to fix before the release is rolled out to the non-preview sandboxes and ultimately to the production instances.

This staggered approach does mean that it’s possible for some development work to take place on orgs running a platform version one ahead of the version on which the production org is running.

If a user adopts any new components or functionality introduced by the new release, they need to update their local package.xml file to the latest version number in order to retrieve it successfully to their local development environment, and would then proceed to commit the new metadata to the VCS.

With no package.xml under version control, and the new release not being globally available, Gearset will defer to the last fully released version. This will cause deployments containing new metadata to fail, as it isn’t recognised by the target org.

Uncontrolled updates

Given the situations described above, it’d be reasonable to conclude that having the API version by way of the package.xml file under version control would be a good idea, and it certainly is the first step on the path to eliminating version compatibility issues. However, without careful control, problems can still arise even with the file committed to the repository.

Let’s go back to our first example where some metadata had been retrieved and committed to the VCS while API v50 was the latest. As time progresses, the package.xml file’s API version remains on v50 in the VCS, and isn’t changed so long as no problems are encountered.

In time though a new feature request requires another developer to use functionality which was released in a later version, v55. In order to get the correct details out of the org and deployed correctly from the relevant branch, they create the functionality in their development environment, update the API version to v55, pull the metadata from the org and commit it and the updated package.xml into VCS.

However, if at some point between v50 and v55, a breaking change was introduced into some other metadata type that exists in the repo, a full deployment of the branch will now fail, as the API version upgrade didn’t consider other components.

sfdx-project.json

If you use the SFDX source format, you won’t be using a package.xml file. But you may still run into the problems described above, as the API version in use is specified in sfdx-project.json rather than package.xml.

Having sfdx-project.json in the VCS is less problematic than package.xml because it doesn’t require a full list of components to be retrieved or deployed.

Commands such as sf project retrieve start and sf project deploy start will, by default, use the same Metadata SOAP API as third-party deployment tools and even change sets. Switches and arguments for these commands allow the user to define the metadata to retrieve or deploy, rather than having it listed out in the file, as per package.xml.
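For comparison with package.xml, a minimal sfdx-project.json needs no component list at all; the `sourceApiVersion` key carries the version. The sketch below builds one with Python’s json module. The `force-app` path, the login URL and the `56.0` value are illustrative assumptions; adjust them to your project.

```python
import json


def make_sfdx_project(source_api_version: str, package_path: str = "force-app") -> str:
    """Build minimal sfdx-project.json content that pins the Metadata API version."""
    project = {
        "packageDirectories": [{"path": package_path, "default": True}],
        "namespace": "",
        "sfdcLoginUrl": "https://login.salesforce.com",
        # Stored as a string, e.g. "56.0"; no list of components is needed here
        "sourceApiVersion": source_api_version,
    }
    return json.dumps(project, indent=2)


print(make_sfdx_project("56.0"))
```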

Take control with an API versioning strategy

It isn’t realistic to expect development or testing to change or stop every time there’s a platform release — a platform upgrade shouldn’t cause such upheaval. To combat the difficulties outlined above, the following steps will help you follow API versioning best practices.

Put API version under version control

The first step towards taking control of platform versions is to ensure that the file which determines the Metadata API version in use, whether this is package.xml or sfdx-project.json, is under version control. Having individual users with their own API versions locally means that you’ll likely end up with a repository containing components on a mixture of API versions and most likely some components with conflicting API requirements. In the worst case, this can mean having a branch that can’t be deployed in its entirety using a single API version.

When using package.xml, also consider the rest of the file once it’s in the VCS, namely the types and members it defines. Originally, these determined which components were to be retrieved from or deployed to the org in question: literally the contents of the Metadata API ‘package’.

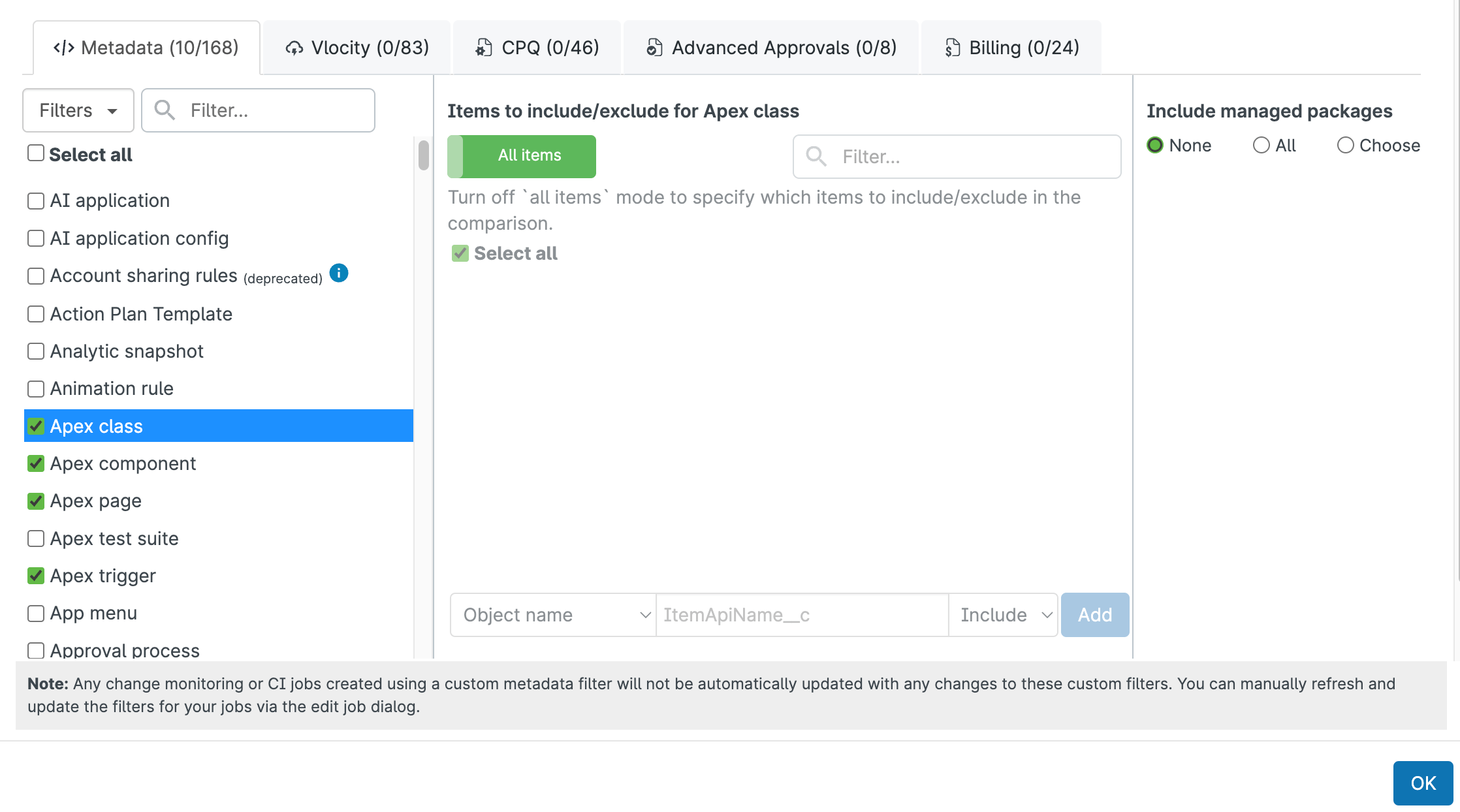

Within Gearset, the metadata filter takes this role, allowing more flexibility than simply enumerating the names of components. If present, the package.xml file can be used to filter the retrieved results down to just those which have been retrieved and are listed in the package, although this isn’t mandatory.


If you’re using package.xml and want to implement API version control without needing the filtering behavior, a skeleton package.xml file, empty of <types> and just containing the <version> element can be put under source control, and as long as the option to filter the results by package.xml isn’t selected in Gearset, you will still gain the required control.
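A minimal sketch of generating such a skeleton with Python’s standard library, assuming the standard Metadata API namespace; the version value is a placeholder for whatever version your team has chosen.

```python
import xml.etree.ElementTree as ET

MD_NAMESPACE = "http://soap.sforce.com/2006/04/metadata"


def skeleton_package_xml(api_version: str) -> str:
    """Return package.xml content with a <version> element and no <types>."""
    ET.register_namespace("", MD_NAMESPACE)  # serialise as the default namespace rather than ns0
    package = ET.Element(f"{{{MD_NAMESPACE}}}Package")
    version = ET.SubElement(package, f"{{{MD_NAMESPACE}}}version")
    version.text = api_version
    body = ET.tostring(package, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(skeleton_package_xml("56.0"))
```

Committing the output of a script like this, rather than a hand-edited copy, keeps the file minimal and avoids a stray `<types>` block turning on filtering by accident.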

Baseline the repository

Baselining your repository involves setting a common Metadata API version against which all your components will successfully deploy. Depending on your starting point, this can take a while to achieve.

Which version isn’t as important as having a single, consistent API version. But by selecting an older Metadata API version, you risk the possibility that some of the components in your repository didn’t exist in that version. So it’s sensible to start with the highest globally available version, which will be the Metadata API version which corresponds to the Salesforce platform version on which your production environment is running.

By using the highest globally available version, the breaking changes should be limited to those components which are in your VCS with older metadata structures where new properties have been introduced since the metadata was last retrieved and committed.

On a dedicated feature branch, update the API version in use to this value. Then retrieve the metadata for all the components in the repository using the selected API version and commit the updated versions to the dedicated API baselining branch. This will enable a diff between the current state and the intended common API state, so you can identify any major changes that may require investigation or regression testing in order to ensure that functionality remains as expected.
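Before and after baselining, it can help to see how mixed the repository is. Many source-format files ending in `-meta.xml` (Apex classes, triggers, Visualforce pages and others) carry an `<apiVersion>` element. The hypothetical helper below tallies those values under a directory. It’s a diagnostic aid under that assumption, not a substitute for re-retrieving the metadata at the chosen version.

```python
import re
from collections import Counter
from pathlib import Path

# Matches the first <apiVersion>NN.N</apiVersion> element in a metadata file
API_VERSION_RE = re.compile(r"<apiVersion>\s*([\d.]+)\s*</apiVersion>")


def tally_api_versions(root: str) -> Counter:
    """Count apiVersion values found in *-meta.xml files under root."""
    counts: Counter = Counter()
    for path in Path(root).rglob("*-meta.xml"):
        match = API_VERSION_RE.search(path.read_text(encoding="utf-8"))
        if match:
            counts[match.group(1)] += 1
    return counts


# Example: print(tally_api_versions("force-app"))
```

A result with more than one key suggests the repository isn’t yet on a single, consistent version.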

Define a formal API upgrade process

Once you have the repository tied to a specific Metadata API version, you will lose all noise associated with different platform versions and older metadata in the repository.

Any retrieve operation initiated by any user will bring back the metadata according to the now-controlled API version. New team members, when initially cloning the repository, will receive the package.xml or sfdx-project.json file defining this version; previously, they may have set up their own local SFDX project, causing the API version to default to the latest, then cloned the folders containing the component metadata into that structure, for example.

Now there will just be two circumstances in which you would want to change the Metadata API version in use:

- New functionality. Your team wants to use new components or enhanced functionality on existing components provided by a more recent Salesforce platform version.

- Salesforce API retirement. Salesforce decides to retire the version of the Metadata API you’re using. This is unlikely to arise, as API version deprecation is rare. While Salesforce does retire API versions — anything below v31 is now deprecated — your team will almost certainly adopt new functionality before the API version they’re using is deprecated.

When you need to update the API version, you should follow a formal, documented and repeatable process.

When defining this process, you’ll want to consider the following points:

- The update process should be an isolated deliverable i.e. a user story or equivalent, and must contain no other changes.

- If your application release cycle contains regular fixed points rather than a continuous delivery model, the very start of a new release cycle is a good time for an API upgrade.

- Update the API version as held in package.xml/sfdx-project.json.

- Pull all metadata in the repository using the new API version.

- Regression test for breaking changes, e.g. check that a deployment using the new version is successful, run automated tests and conduct any appropriate manual testing. Having a comprehensive suite of automated tests will really help here.

- Push only the API-related changes, plus any fixes made for regression issues, to the upgrade branch.

- Commit and push these changes through the pipeline. If other work is already in progress, it must not be deemed complete until its branch has received the API update commit/s and been validated with them included.

- Any processes that don’t use package.xml/sfdx-project.json to determine the API version should be updated, e.g. Gearset org monitoring jobs.

- Baseline the time and effort required for an API upgrade, so you know how much time to carve out each time.

Reject ad-hoc API version changes

This is a critical part of retaining control over the process. With the package.xml/sfdx-project.json file in version control, you’ll gain visibility over situations where the version number has been changed.

Any pull requests which show a change to the version number should be investigated. The version number itself must not change outside the formal API upgrade process, but a change on an unrelated PR might be a simple misunderstanding, and the only necessary change is to revert the number for the PR to be accepted.
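Spotting a changed version number can be automated rather than left to reviewers. A minimal sketch, assuming your CI can supply the package.xml content from both the target branch and the PR branch (how you fetch them, and the `is_upgrade_story` flag, are hypothetical): the check rejects any PR that changes the version outside the formal upgrade process.

```python
import xml.etree.ElementTree as ET

MD_NS = "{http://soap.sforce.com/2006/04/metadata}"


def declared_version(xml_text: str) -> str:
    """Read the <version> value from package.xml content."""
    node = ET.fromstring(xml_text).find(f"{MD_NS}version")
    if node is None or not node.text:
        raise ValueError("package.xml has no <version> element")
    return node.text.strip()


def version_change_allowed(base_xml: str, head_xml: str, is_upgrade_story: bool = False) -> bool:
    """Allow a PR only if the API version is unchanged, or the PR is the designated upgrade story."""
    return declared_version(base_xml) == declared_version(head_xml) or is_upgrade_story


def pkg(version: str) -> str:
    return f'<Package xmlns="http://soap.sforce.com/2006/04/metadata"><version>{version}</version></Package>'


print(version_change_allowed(pkg("56.0"), pkg("56.0")))  # True
print(version_change_allowed(pkg("56.0"), pkg("57.0")))  # False: investigate before accepting
```

In a CI job, a `False` result would typically fail the check so the conversation happens before the PR is merged.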

If a user finds that functionality they used to implement a requirement isn’t available on the existing controlled API version, they’ll want to update it in order to retrieve their metadata. This should trigger a conversation about the API version in use — ideally before the PR has been submitted with the version already updated.

In this situation, you mustn’t revert to a previous state where different components in the repo are in states from different Metadata API versions, so simply accepting the metadata but not the API version change isn’t an option.

Firstly, consider whether the work has to be done using the intended metadata components. Are there alternative complete solutions which involve items available in your currently used version? This would be a good initial compromise. If the originally intended solution would have offered some benefit, such as a performance improvement, document it in such a way that it can be re-implemented after the next API upgrade.

If there’s no alternative solution, consider how critical the functionality is at the moment. Would not having the story implemented before an API upgrade harm the application, or can the story be delayed until such time as the application supports the necessary API version?

If the story can’t wait, it would be very useful to understand the amount of effort required for an API upgrade, either from the initial baselining process or any formal upgrade that has happened since. With this in mind, you can consider whether the effort and regression risk involved in upgrading the entire application to implement this story is a worthwhile trade-off.

These are all subjective decisions, but should be considered if an item of new functionality would require an out-of-sequence API upgrade. As part of a retrospective, consider whether your API upgrade frequency is sufficient, especially if you’re encountering this situation on multiple occasions. Even if there’s some degree of time spent in an API upgrade which is at the cost of feature development for customers or users, if you find yourself having to implement an ad-hoc application-wide API upgrade under time pressure, a more regular cadence may well be a better option.

Continuous validation

A pull request might not always highlight when the API version has changed. A user may commit new metadata for a later API version without also committing the API change.

Unless one of the reviewers on a pull request spots that the new metadata relates to a more recent Metadata API version (the addition of a new metadata type folder would be a fairly obvious clue, but more subtle changes such as new mandatory properties in existing types are more difficult to spot), the chances are that the changes could be accepted into the branch and, with them, the breaking change.
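One of the clues above, a new metadata type folder, is easy to check mechanically. A hedged sketch, assuming source-format paths such as `force-app/main/default/<typeFolder>/...` and that CI can list the files on the PR branch and on the target branch (the inputs here are plain lists of paths). It reports folders that exist only on the PR branch as a prompt for reviewers. Subtler changes, such as new mandatory properties on existing types, still need a validation deployment.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Set

SOURCE_ROOT = "force-app/main/default"  # assumed default package directory


def type_folders(paths: Iterable[str], source_root: str = SOURCE_ROOT) -> Set[str]:
    """Return the first folder name below source_root for each file path under it."""
    root = PurePosixPath(source_root)
    folders: Set[str] = set()
    for raw in paths:
        path = PurePosixPath(raw)
        try:
            relative = path.relative_to(root)
        except ValueError:
            continue  # file is outside the source root, e.g. a README
        if len(relative.parts) > 1:
            folders.add(relative.parts[0])
    return folders


def new_type_folders(base_paths: Iterable[str], head_paths: Iterable[str]) -> List[str]:
    """List metadata type folders present on the PR branch but not on the target branch."""
    return sorted(type_folders(head_paths) - type_folders(base_paths))


base = ["force-app/main/default/classes/Foo.cls"]
head = base + ["force-app/main/default/lwc/myComp/myComp.js"]
print(new_type_folders(base, head))  # ['lwc']
```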

Some process options will help your team identify a changed API version at the earliest possible opportunity.

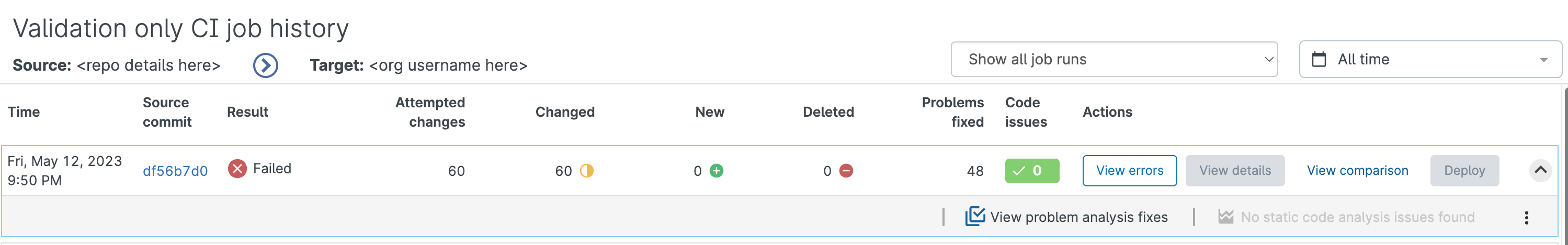

Having pull requests trigger an automated validation against the next Salesforce org will help your team catch issues with API versions. A validate-only CI job will run using the version defined in the package.xml or sfdx-project.json file on the branch. This validation will fail if unknown metadata is present on the branch.

Less proactive is to set up a validate-only deployment job to check the main branch (or other target branch) against the next org in the pipeline. Either way, as soon as such a job runs after the feature branch with new metadata is merged, it will fail for the same reason as described above, and the appropriate remedial action can be taken; although in this situation, it’s a matter of recovery rather than of prevention.

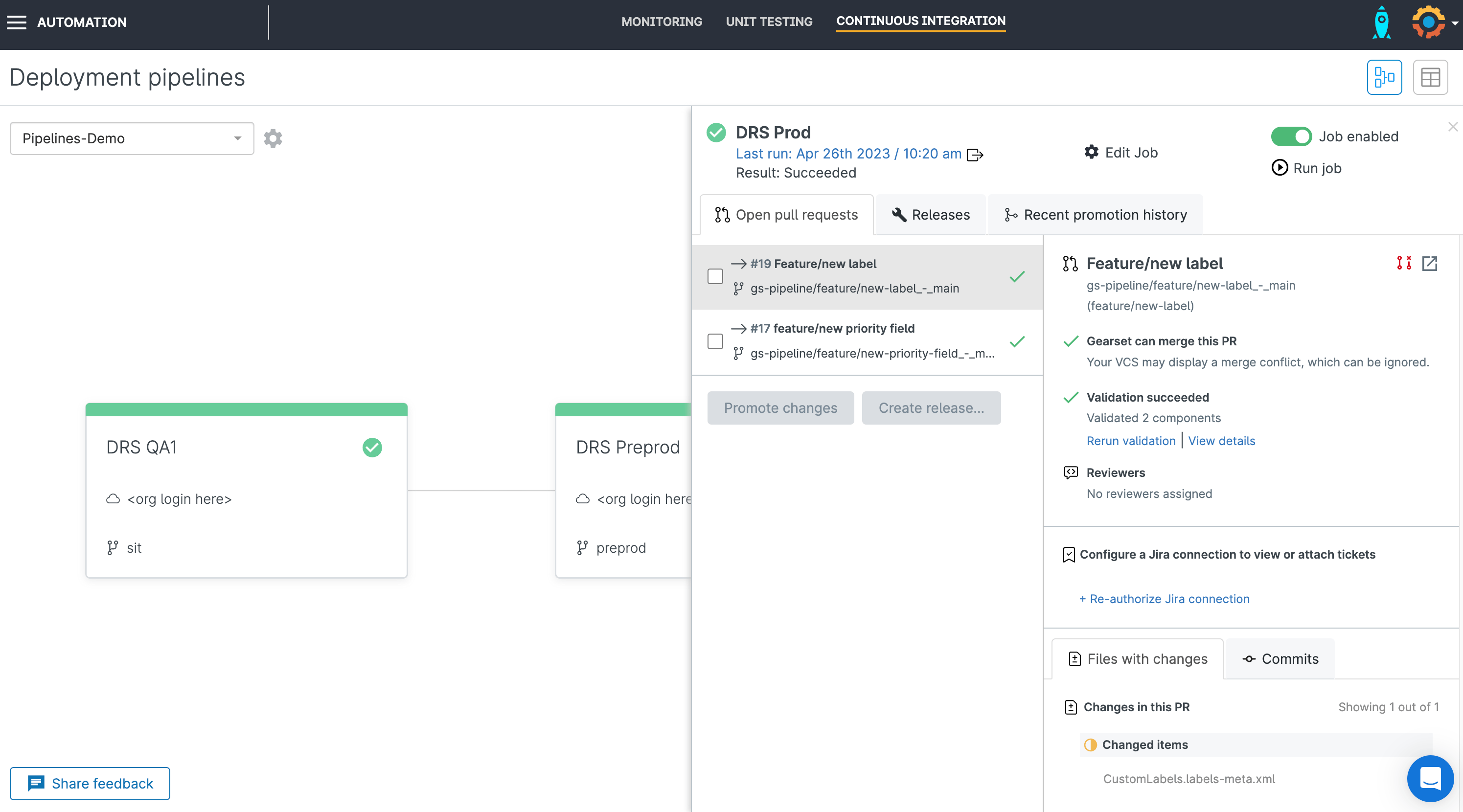

Gearset Pipelines, when configured with CI jobs which validate PRs targeting the relevant environment branch, will automatically validate the feature branch contents against the next org, ensuring that the breaking change is uncovered at the earliest possible opportunity.

Salesforce Apex API versions

As discussed above, each Apex class is assigned its own API version number, which defines the standard objects and fields available to the code within the class. The potential issues with having classes on mixed Apex API versions are certainly smaller than those caused by mixed Metadata API versions. For example, a typical issue would only occur if one class created an instance of a standard object with certain field values set, then passed it to another class on a lower API version which didn’t have access to some of those fields. The longer Apex class API versions are left between synchronisations, the greater the risk of this happening.

This is because the Apex API version assigned to a class is set at class creation time and defaults to the current platform version if created on an org directly, or to the default SFDX configuration value if created via SFDX. As time progresses and org platform versions increase, there will be a growing variance of these Apex API versions.

To avoid potential issues from different class versions building up, it’s a good idea to also update the Apex API versions as part of the wider API upgrade process. The API version is held in the apiVersion element of the file named <class name>.cls-meta.xml, so it can easily be upgraded and committed as part of the same upgrade exercise.
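A minimal sketch of that bulk update in Python, assuming source-format `.cls-meta.xml` files that each contain a single `<apiVersion>` element; the helper name and the `force-app` path in the usage comment are illustrative. Files are read and written as bytes so that line endings and everything other than the version value stay as they were, keeping the commit diff focused on the version changes.

```python
import re
from pathlib import Path
from typing import List

API_VERSION_RE = re.compile(r"<apiVersion>[^<]*</apiVersion>")


def bump_apex_class_versions(root: str, new_version: str) -> List[str]:
    """Set <apiVersion> in every *.cls-meta.xml under root; return the files that changed."""
    changed = []
    for path in sorted(Path(root).rglob("*.cls-meta.xml")):
        text = path.read_bytes().decode("utf-8")  # bytes round-trip preserves line endings
        updated = API_VERSION_RE.sub(f"<apiVersion>{new_version}</apiVersion>", text, count=1)
        if updated != text:
            path.write_bytes(updated.encode("utf-8"))
            changed.append(str(path))
    return changed


# Example: bump_apex_class_versions("force-app", "56.0")
```

The same pattern could be extended to trigger metadata (`.trigger-meta.xml`) if your team decides to keep those in step as well.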

Master your API version management

By implementing the documented principles, adjusted for any local custom considerations, you’ll make the most of the functionality offered by Salesforce to take ownership of API version upgrades, removing the possibility of surprises and blockages from breaking changes introduced by Salesforce on their own schedule.

This will allow you to navigate the Salesforce release rollout windows with confidence, make sure you don’t get unexpected validation or deployment failures solely due to platform versioning, and focus on providing new features and functionality to your end users.

Gearset can help you implement the recommendations in this guide, so you have full control of Metadata API versions and keep on top of your release process. If you’re new to Gearset, start a free 30-day trial or reach out via the live chat to find out more.