If you want to build a reliable, trustworthy agent, thorough testing is essential. While Agent Builder is great for exploring individual conversations, testing multiple scenarios manually can quickly become time-consuming.

Agentforce Testing Center makes structured, scalable testing a natural part of agent development. Designed for accuracy and iteration, it helps teams validate their agents, monitor performance, and deliver safer, more trustworthy experiences to end users.

With batch testing, you can evaluate large volumes of inputs in a single run — and by using generative AI to create test cases, you can cover more ground with less effort. The result is faster validation, shorter release cycles, and agents you can confidently put into production.

Why you should test your agents

Unlike traditional code, AI agents operate in a world of probabilities and interpretation. They draw from a vast knowledge base — spanning your org and any connected integrations — which gives them the power to deliver flexible, human-like responses. But that same flexibility also introduces a higher risk of inconsistency. Testing ensures your agents behave in ways that are consistently helpful, secure, and aligned with your business goals.

It’s not just about confirming that the agent responds correctly. Good testing also surfaces blind spots: places where your prompts fall flat, responses sound a bit off, or edge cases trigger unintended actions. As agents become more integrated with tools like Salesforce Data Cloud, testing becomes critical for maintaining data integrity and user trust. Effective testing speeds up development and gives teams confidence in the quality of their agents’ interactions.

Testing in the AI agent lifecycle

Agent development isn’t linear. It’s a loop that relies on continuous feedback, iteration, and refinement. During the initial build, tests help validate prompt logic, response accuracy, and fallback behavior. Once the agent is integrated into a broader system, testing ensures it interacts correctly with real-world data, handles noisy or unexpected inputs, and responds in ways that align with your business goals.

In production, teams need to monitor AI agents for signs of drift, degraded performance, or shifting user behavior. With more than a third of teams aiming to enhance AI workflows in 2025, continuous oversight isn’t just best practice; it’s essential. Feedback, whether it’s collected from users, developers, or system logs, becomes the raw material for the next round of improvements. That insight loops back into development, where new tests validate refined prompts, updated workflows, or adjusted data integrations.

Agentforce Testing Center is purpose-built for this cyclical model. It provides a structured, repeatable way to test your agents at every stage — whether you’re prototyping new features, stress-testing live logic, or tracking long-term performance trends. By embedding testing into every phase of the agent lifecycle, teams can move faster with confidence, knowing their agents will continue to deliver reliable, accurate responses at scale.

What is Agentforce Testing Center?

Agentforce Testing Center is a dedicated environment for validating AI agents built within the broader Salesforce AI platform. Rather than relying on manual testing or fully external QA tools, it gives teams a scalable, integrated workspace for running structured tests, tracking results, and improving agent performance — all without exposing the agent to your end users.

Teams can simulate hundreds of interactions in a single run, measure response quality, and track how the agent performs across a wide range of inputs. This kind of batch testing helps teams move beyond anecdotal spot checks and toward comprehensive validation that’s fast, repeatable, and consistent.
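To make the idea of a batch run concrete, here is a minimal Python sketch of what batch testing does conceptually: send many utterances through an agent in one pass and record whether each one was routed as expected. The `stub_agent` function, the topic names, and the `AgentTestCase`/`AgentTestResult` types are hypothetical stand-ins; Testing Center runs tests for you and does not expose code like this.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentTestCase:
    utterance: str
    expected_topic: str


@dataclass
class AgentTestResult:
    utterance: str
    expected_topic: str
    actual_topic: str

    @property
    def passed(self) -> bool:
        return self.expected_topic == self.actual_topic


def stub_agent(utterance: str) -> str:
    """Hypothetical agent: routes an utterance to a topic by keyword."""
    text = utterance.lower()
    if "refund" in text or "return" in text:
        return "Order Returns"
    if "track" in text or "where is" in text:
        return "Order Tracking"
    return "Fallback"


def run_batch(cases: list[AgentTestCase], agent: Callable[[str], str]) -> list[AgentTestResult]:
    """Run every test case through the agent in a single pass."""
    return [AgentTestResult(c.utterance, c.expected_topic, agent(c.utterance)) for c in cases]


cases = [
    AgentTestCase("I want a refund for my shoes", "Order Returns"),
    AgentTestCase("Where is my package?", "Order Tracking"),
    AgentTestCase("Can I change my delivery address?", "Order Updates"),
]
results = run_batch(cases, stub_agent)
passed = sum(r.passed for r in results)
print(f"{passed}/{len(results)} passed")  # 2/3 passed
```

The third case falls through to the fallback, which is the kind of gap a single manual spot check can easily miss but a batch run surfaces immediately.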

Crucially, it’s not just about checking whether an agent gives the “right” answer. Testing Center offers visibility into how the agent arrives at that answer: how it interprets the user’s intent, how it applies internal logic or fallback behavior, and how it retrieves information from data sources like Salesforce Data Cloud. Every test is logged in detail, providing a clear audit trail and enabling deep analysis of both successes and failures.

That level of visibility transforms testing from a checklist into a strategic advantage. You’re not just verifying outputs — you’re learning how your agent behaves, spotting patterns, and identifying opportunities to improve.

Core features of Agentforce Testing Center

Testing Center makes it possible to manage and validate AI agents at scale, without compromising on speed or quality.

AI-generated test cases. Automatically create test inputs based on the agent’s structure and logic, helping uncover edge cases and gaps you might otherwise miss. You can use synthetically generated data to simulate a wide range of real-world user inputs.

Sandbox environment support. Run tests in secure sandbox environments that mirror production settings — ideal for validating behavior without impacting end users.

Salesforce Data Cloud integration. Agentforce and Data Cloud work hand-in-hand, giving you the option to incorporate real-world, dynamic data into your test scenarios. This lets you evaluate how context (like user history or profile details) affects agent responses.

Monitoring and observability. Transparent usage monitoring gives teams greater insight into agent behavior with detailed logs, interaction-level audit trails, and real-time performance stats.

Feedback tracking. Capture human-in-the-loop (HITL) feedback during tests to pinpoint issues and prioritize improvements.

Utterance analysis. Understand how specific inputs are interpreted and processed, helping to fine-tune natural language understanding.

Agent analytics. Track usage patterns, prompt performance, fallback frequency, and response accuracy over time, turning testing into a continuous feedback loop.

These features combine to give teams a structured, reliable way to test and refine their agents with confidence.
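Metrics like response accuracy and fallback frequency are simple ratios over a set of test results. The sketch below computes both from a list of result records; the record fields (`expected_topic`, `actual_topic`) and the `"Fallback"` label are assumptions made for illustration, not Testing Center’s actual export format.

```python
from dataclasses import dataclass


@dataclass
class Result:
    expected_topic: str
    actual_topic: str


def summarize(results: list[Result], fallback_label: str = "Fallback") -> dict[str, float]:
    """Return topic accuracy and fallback rate as fractions between 0 and 1."""
    total = len(results)
    if total == 0:
        return {"accuracy": 0.0, "fallback_rate": 0.0}
    correct = sum(r.expected_topic == r.actual_topic for r in results)
    fallbacks = sum(r.actual_topic == fallback_label for r in results)
    return {"accuracy": correct / total, "fallback_rate": fallbacks / total}


results = [
    Result("Order Returns", "Order Returns"),
    Result("Order Tracking", "Order Tracking"),
    Result("Order Updates", "Fallback"),
    Result("Billing", "Fallback"),
]
print(summarize(results))  # {'accuracy': 0.5, 'fallback_rate': 0.5}
```

Recording these two numbers for each run is one way to check whether a prompt change reduced fallbacks without hurting accuracy.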

The real-world benefits of Agentforce Testing Center

Using Agentforce Testing Center can change how you approach agent development. It creates a safe, scalable testing process that supports fast iteration. You can build new prompts, run a batch of tests, and deploy improvements with confidence — all without the fear of breaking something in production.

Agentforce Testing Center gives you clear visibility into how your agent is actually performing. Rather than relying on anecdotal reports or scattered bug tickets, you get hard data (metrics, logs, and feedback) that reveals what’s working and what’s not. You can trace why certain prompts fail, where fallbacks are triggered too often, or which responses are underperforming. That insight helps you prioritize fixes, fine-tune prompts, and improve overall agent quality over time.

Ultimately, this isn’t just about testing. It’s about delivering AI experiences your users can trust, which is one of the biggest challenges companies face. With the right data and infrastructure in place, teams can shift from reactive troubleshooting to proactive optimization. In a world where governance, speed, and reliability are essential, Agentforce Testing Center gives you the level of control you need to scale with confidence.

What Agentforce Testing Center doesn’t cover (yet)

It’s worth noting that Agentforce Testing Center, while very powerful, isn’t a silver bullet. Yes, it delivers a robust framework for testing AI agents, but like any tool, it has its boundaries. It’s important to be aware of these limitations so you can design a testing strategy that fills the gaps and complements the tool’s strengths.

One of the key constraints is that it currently supports only single-turn interactions. This means Testing Center is great for evaluating how an agent responds to individual prompts in isolation, but if your agent relies on multi-turn conversations, context retention, or follow-up clarification logic, you’ll need to bring in additional tooling or manual testing approaches to validate those workflows. This is particularly relevant for support bots or assistants that guide users through complex, step-by-step processes.
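If you build your own tooling for multi-turn behavior, one common pattern is a scripted conversation test: replay user turns in order against a single agent session and check each reply. This minimal Python sketch uses a hypothetical `StubSupportAgent` that remembers an order number between turns; in practice you would replace it with whatever client your own tooling uses to talk to the agent.

```python
from typing import Optional


class StubSupportAgent:
    """Hypothetical stateful agent that remembers an order number across turns."""

    def __init__(self) -> None:
        self.order_number: Optional[str] = None

    def reply(self, message: str) -> str:
        # Treat any all-digit word as an order number and remember it
        digits = [w for w in message.replace("?", "").split() if w.isdigit()]
        if digits:
            self.order_number = digits[0]
            return f"Thanks, I found order {self.order_number}."
        if "status" in message.lower():
            # Answering this turn depends on context from an earlier turn
            if self.order_number is None:
                return "Which order do you mean?"
            return f"Order {self.order_number} has shipped."
        return "Can you tell me more?"


def run_conversation(agent: StubSupportAgent, script: list[tuple[str, str]]) -> list[bool]:
    """Send user turns in order to one agent instance; check each reply contains the expected text."""
    return [expected in agent.reply(user_turn) for user_turn, expected in script]


script = [
    ("My order number is 12345", "12345"),
    ("What's the status?", "12345 has shipped"),
]
print(run_conversation(StubSupportAgent(), script))  # [True, True]
```

The second turn only passes because the agent kept the order number from the first, which is the kind of context retention a single-turn test can’t exercise.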

Another consideration is the interpretive nature of the results. While Testing Center surfaces detailed data — including logs, fallback rates, and prompt-level outcomes — it doesn’t automatically diagnose problems or prescribe solutions. The insights are there, but making sense of them requires human analysis. Teams still need to review the patterns, map test results back to prompt behavior, and decide on the right improvements to make. Agentforce Testing Center isn’t a plug-and-play solution that replaces critical thinking — it’s a framework that supports it.

How Testing Center compares to other Agentforce testing approaches

If you’ve used Agent Builder’s built-in test features, you’ll know that manual testing can be effective — especially during early development or when debugging specific paths. However, it lacks scale and consistency. You might miss edge cases or find it hard to reproduce certain behaviors.

On the other end of the spectrum, some teams use third-party QA platforms for end-to-end testing. These offer broader test coverage but can be slower to set up, harder to maintain, and more expensive. Agentforce Testing Center sits comfortably in between — fast enough for daily use, powerful enough to reveal meaningful insights.

Agentforce testing tips

Thorough testing doesn’t just help you catch bugs — it’s how you build better agents, faster. Here’s how to make the most of Agentforce Testing Center with a structured, repeatable approach:

Identify key test case scenarios

Start by mapping out the most important things your agent should be able to handle. Focus on core tasks, high-traffic interactions, and any business-critical use cases. Then layer in variations, edge cases, and expected failure scenarios — especially if your agent relies on complex logic.
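One lightweight way to plan this is to tag each scenario with a category and check that no category is left empty before you build the suite. The category names and example utterances below are hypothetical; use whatever groupings match your agent.

```python
from collections import Counter

# Hypothetical scenario plan: (category, utterance)
scenarios = [
    ("core", "I want to return my order"),
    ("core", "Where is my package?"),
    ("edge", "return???"),
    ("edge", "I want to return an order I never placed"),
    ("expected_failure", "Write me a poem about shoes"),
]

REQUIRED_CATEGORIES = {"core", "edge", "expected_failure"}


def coverage_gaps(plan: list[tuple[str, str]], required: set[str]) -> set[str]:
    """Return required categories that have no scenarios yet."""
    counts = Counter(category for category, _ in plan)
    return {c for c in required if counts[c] == 0}


print(Counter(category for category, _ in scenarios))
print(coverage_gaps(scenarios, REQUIRED_CATEGORIES))  # set()
```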

Create realistic test data

If your agent interacts with Salesforce data or external APIs, test it against inputs that reflect that complexity. This could include different user types, incomplete records, or unexpected values. The more accurately your test data mirrors production, the more reliable your results will be.
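A simple way to get that variety is to start from one well-formed record and derive variants from it: one copy with each field removed, plus a few with unexpected values. The field names and odd values in this Python sketch are hypothetical examples; swap in the fields your agent actually reads.

```python
import copy

base_contact = {"name": "Ada Lovelace", "email": "ada@example.com", "tier": "Gold"}

# Hypothetical unexpected values to try for specific fields
UNEXPECTED = {"email": ["", "not-an-email"], "tier": ["gold ", "Platinum++"]}


def record_variants(base: dict, unexpected: dict) -> list[dict]:
    """Build the base record, one copy per missing field, and one copy per odd value."""
    variants = [copy.deepcopy(base)]
    for field in base:
        missing = copy.deepcopy(base)
        del missing[field]
        variants.append(missing)
    for field, values in unexpected.items():
        for value in values:
            odd = copy.deepcopy(base)
            odd[field] = value
            variants.append(odd)
    return variants


variants = record_variants(base_contact, UNEXPECTED)
print(len(variants))  # 8
```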

Document prompt paths and responses

Keep a record of how each test case maps to specific prompts, expected outcomes, and triggers. This will help you track changes over time, diagnose regressions, and maintain consistency as your agent evolves.

Analyze results to uncover trends

Use Testing Center’s analytics and logs to look for patterns, like recurring error messages, misunderstood inputs, or underperforming prompts. Don’t just focus on individual failures; look at what they’re telling you collectively.
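Looking at failures collectively can be as simple as counting them by a shared attribute, such as the topic the agent should have chosen versus the one it actually picked. The failure records in this Python sketch are hypothetical; in practice you would build them from your own test results.

```python
from collections import Counter

# Hypothetical failed test results: (expected_topic, actual_topic)
failures = [
    ("Order Updates", "Fallback"),
    ("Order Updates", "Fallback"),
    ("Billing", "Order Tracking"),
    ("Order Updates", "Order Tracking"),
    ("Billing", "Order Tracking"),
]

# Count each (expected, actual) pair to find recurring misroutes
patterns = Counter(failures)
for (expected, actual), count in patterns.most_common(2):
    print(f"{count}x expected {expected!r}, got {actual!r}")

# Count by expected topic to find the weakest area overall
by_expected = Counter(expected for expected, _ in failures)
print(by_expected.most_common(1))  # [('Order Updates', 3)]
```

Here no single misroute dominates, but grouping by expected topic shows that "Order Updates" accounts for most failures, which points you at that topic’s instructions rather than at individual test cases.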

Continuously iterate and refine

Testing isn’t a one-time job. As your agent develops, so should your test suite. Use insights from each round of testing to refine prompts, improve logic, and extend coverage. The best-performing agents are built through cycles of feedback and iteration.

How to use Agentforce Testing Center

With its intuitive interface and no-code tools, Agentforce Testing Center is accessible to developers and admins alike. Once you’ve built your agent, here’s how to test it.

Open Testing Center

Head to your AI agent project within Agentforce. In the left-hand menu, search for and select Testing Center. This is your control hub for managing and running test cases across your agent’s logic. If you’re testing an agent you’ve just built in Agent Builder, you’ll see a Batch Test option before you activate the agent; selecting it takes you to Testing Center.

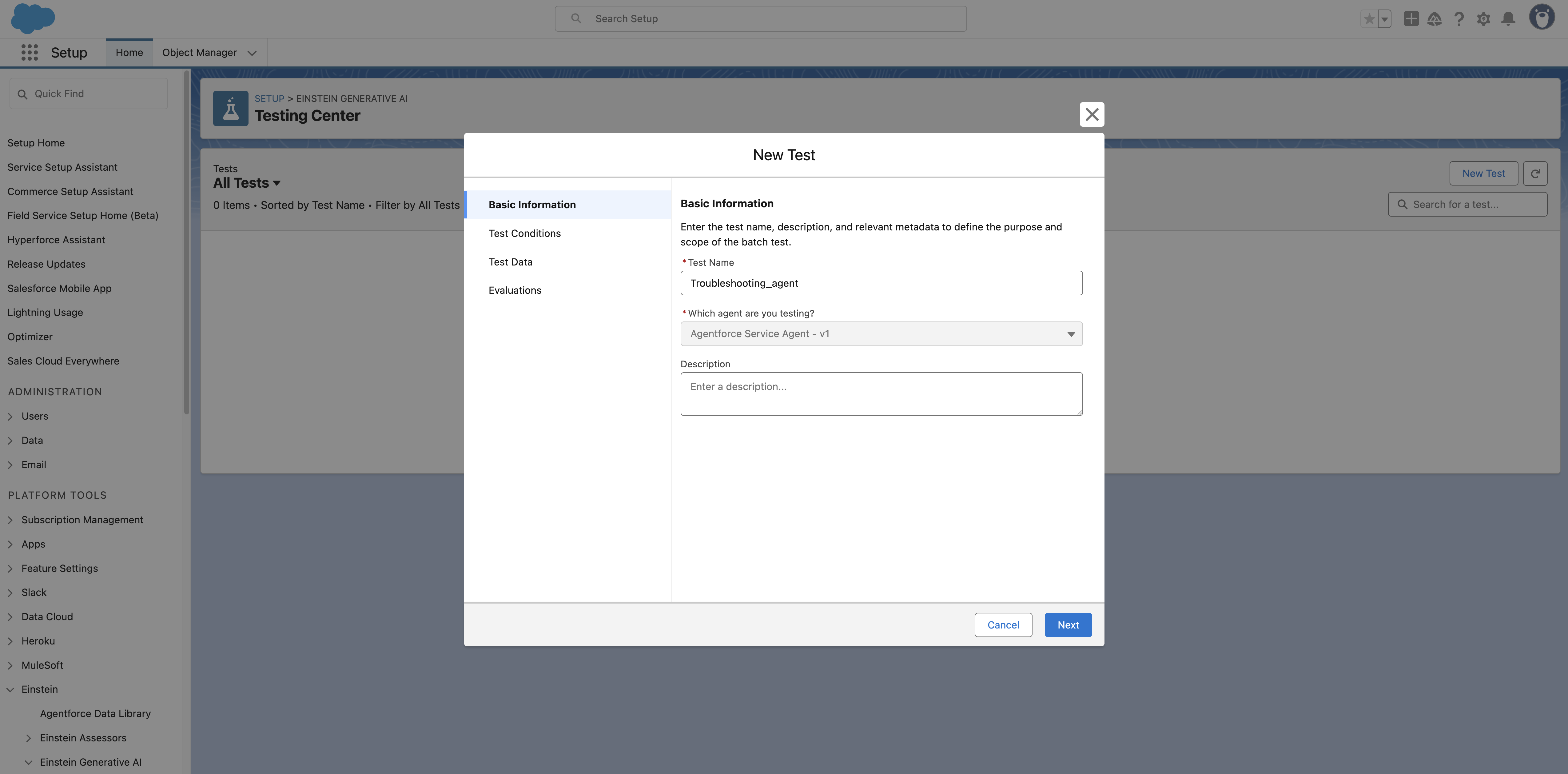

Name your test and choose the conditions

Click New Test and give it a name. Click Next, then choose the test conditions you want for your agent.

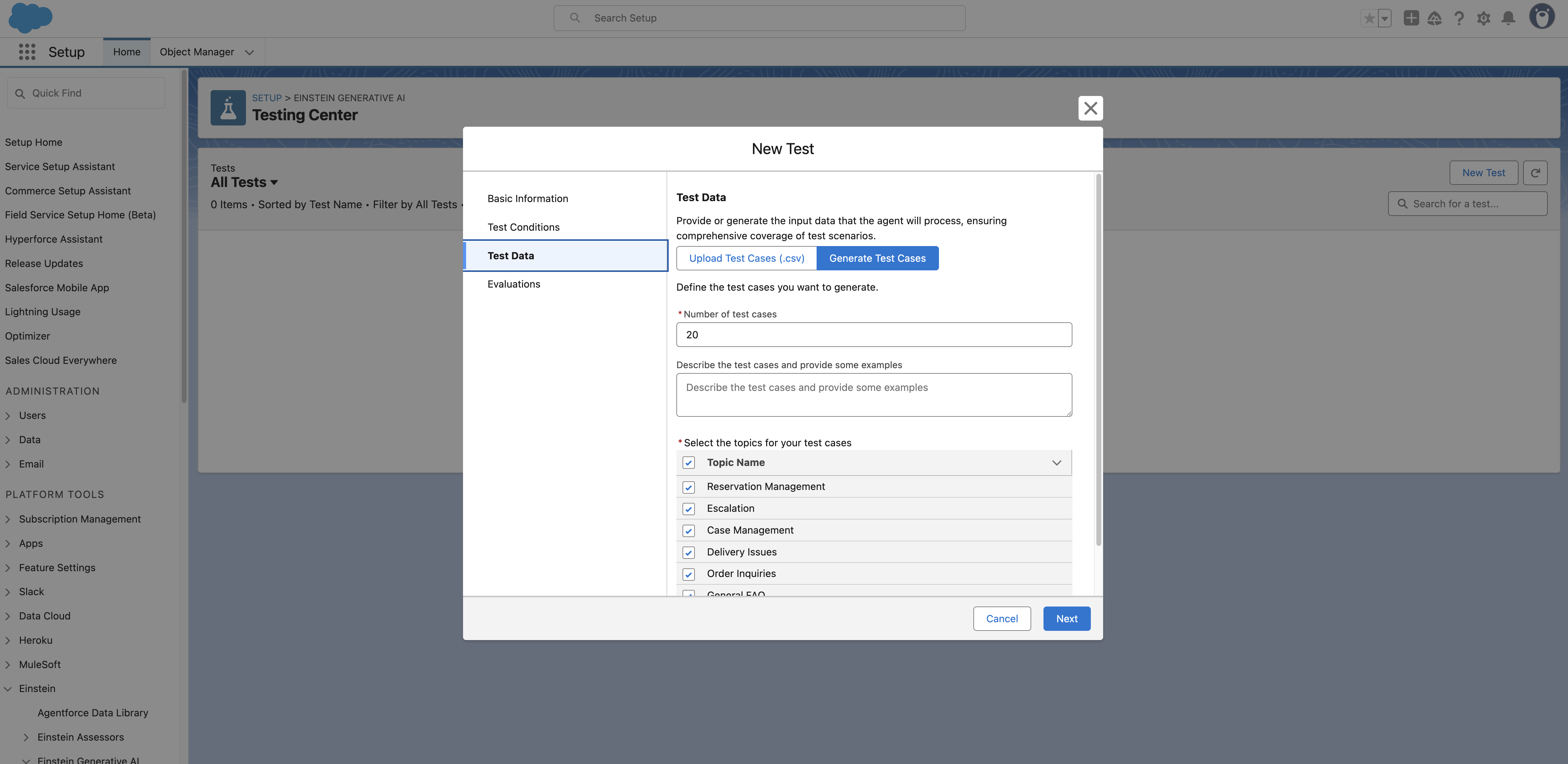

Configure your test data

You have two choices here:

Write custom test cases manually to target specific intents, prompts, or known edge cases.

Generate your test cases with AI, using your agent’s structure and training data. This is a quick way to simulate a wide range of real-world interactions and uncover potential blind spots.

You can mix and match both approaches depending on the depth and control you need.

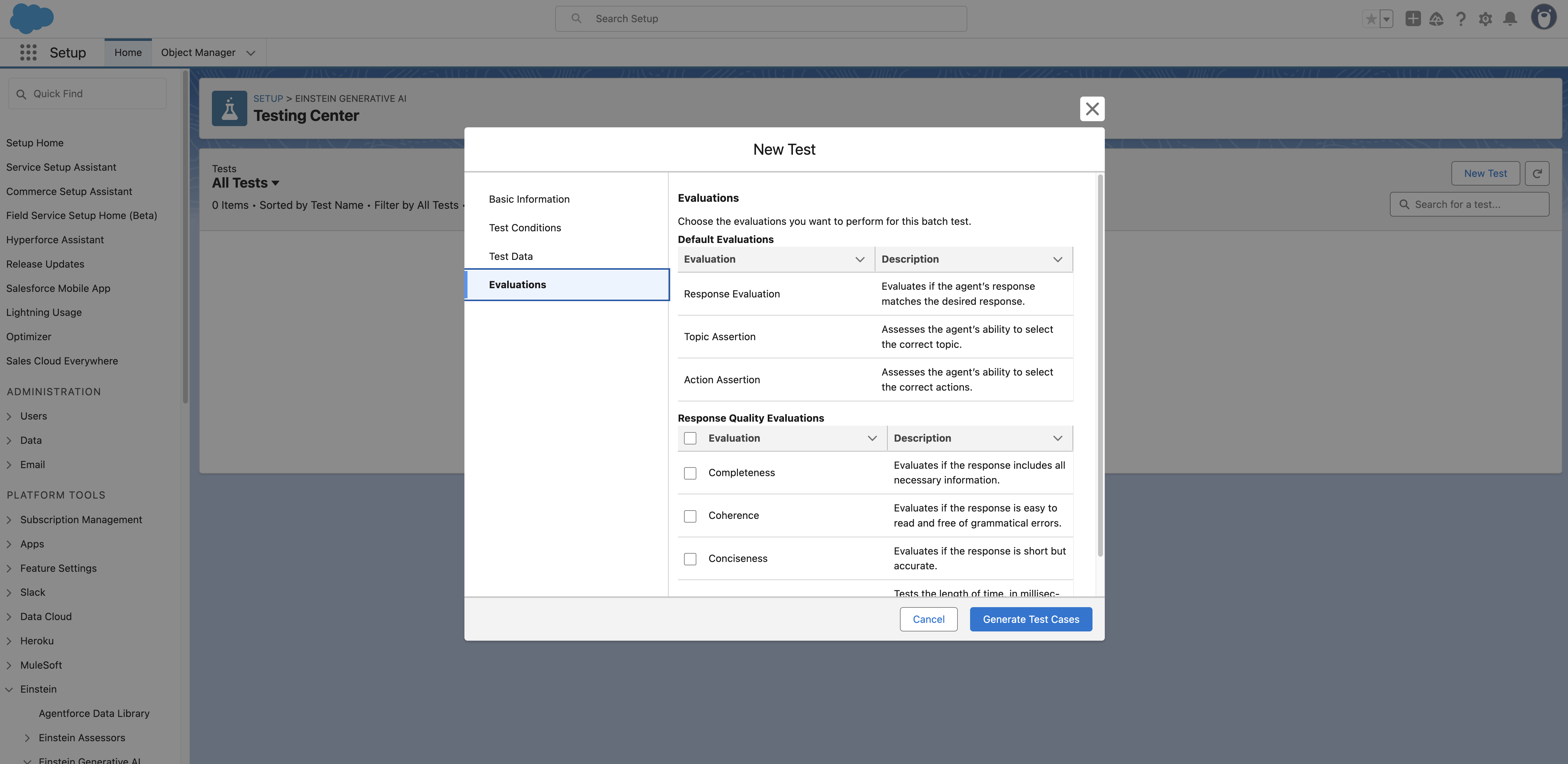
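If you write test cases yourself, keeping them in a CSV file makes them easy to review and version alongside your agent. The Python sketch below writes a small CSV with the standard `csv` module. The column headers are an assumption modelled on the fields a test case typically needs (utterance, expected topic, expected actions, expected response), and the topic and action names are hypothetical; check the template Testing Center provides for the exact headers and value formats before uploading anything.

```python
import csv
import io

# Column headers are an assumption; match them to the template Testing Center provides.
FIELDS = ["Utterance", "Expected Topic", "Expected Actions", "Expected Response"]

# Hypothetical test cases targeting specific intents
test_cases = [
    {
        "Utterance": "I want to return my order",
        "Expected Topic": "Order Returns",
        "Expected Actions": "Get Order Details",
        "Expected Response": "The agent asks for the order number.",
    },
    {
        "Utterance": "Where is my package?",
        "Expected Topic": "Order Tracking",
        "Expected Actions": "Get Shipment Status",
        "Expected Response": "The agent provides the current shipping status.",
    },
]


def to_csv(rows: list[dict], fields: list[str]) -> str:
    """Serialize test cases to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = to_csv(test_cases, FIELDS)
print(csv_text)
```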

Choose your evaluations

Choose exactly what you want the test to focus on — whether that’s completeness of answers or the time it takes for the agent to respond. Once you’re done, click Generate test cases. You’ll be taken to the Testing Center.
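A response-time evaluation usually boils down to comparing measured latencies against a budget. This Python sketch computes a nearest-rank 95th percentile over hypothetical per-test latencies (in milliseconds) and checks it against an example threshold. Testing Center reports its own timing figures; the 2,000 ms budget here is arbitrary and not a product default.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of values at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical per-test response times in milliseconds
latencies_ms = [820, 950, 1100, 1240, 1310, 1480, 1620, 1750, 2300, 4100]
BUDGET_MS = 2000  # example threshold, not a Testing Center default

p95 = percentile(latencies_ms, 95)
slow = [t for t in latencies_ms if t > BUDGET_MS]
print(p95, p95 <= BUDGET_MS, len(slow))  # 4100 False 2
```

Looking at a high percentile rather than the average keeps a handful of very slow responses from being hidden by many fast ones.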

Run your tests

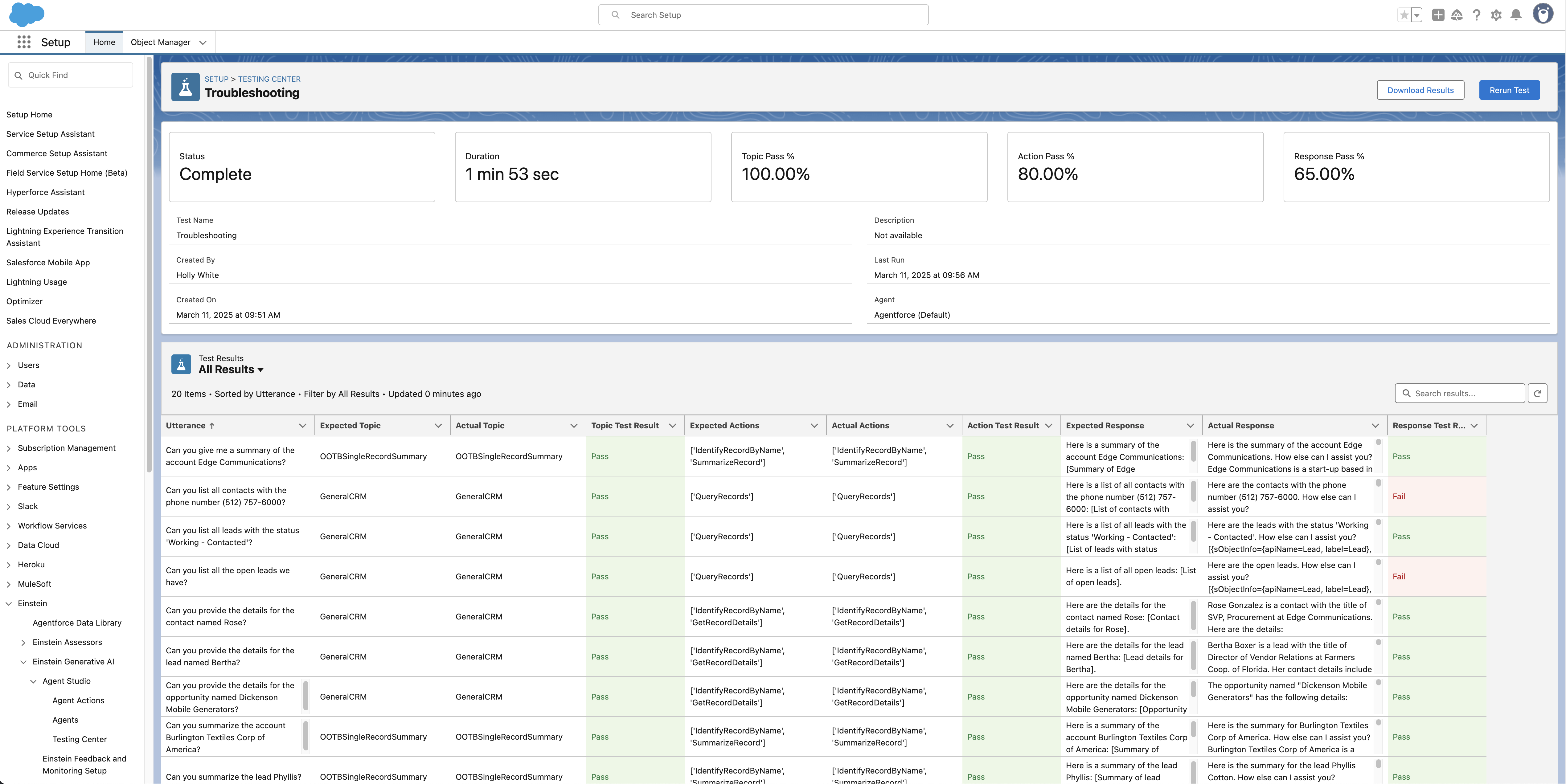

Once your test cases have been generated (you might need to refresh the page after a few minutes), click Run Test Suite to launch your tests. When the tests finish, you can analyze the results.

Iterate and retest

Update your agent’s prompts, fallback logic, or integrations based on what you’ve learned. Then re-run your test cases to validate the changes. Over time, your test suite becomes an evolving quality gate — helping you deploy with confidence, every time.
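When you re-run a suite, the most useful comparison is often per test case: which tests that passed last time now fail, and which earlier failures are now fixed. This Python sketch diffs two runs keyed by a test identifier; the IDs and pass/fail values are hypothetical.

```python
def compare_runs(previous: dict[str, bool], current: dict[str, bool]) -> dict[str, list[str]]:
    """Compare two runs: regressions, fixes, and tests that only exist in the current run."""
    shared = sorted(previous.keys() & current.keys())
    return {
        "regressions": [t for t in shared if previous[t] and not current[t]],
        "fixed": [t for t in shared if not previous[t] and current[t]],
        "new": sorted(current.keys() - previous.keys()),
    }


# Hypothetical results: test ID -> passed?
before = {"returns-01": True, "tracking-01": True, "updates-01": False}
after = {"returns-01": True, "tracking-01": False, "updates-01": True, "billing-01": True}

print(compare_runs(before, after))
# {'regressions': ['tracking-01'], 'fixed': ['updates-01'], 'new': ['billing-01']}
```

Treating any non-empty `regressions` list as a blocking result is one way to make the suite act as the quality gate described above.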

Boost agent performance with better testing

Agentforce makes it possible to build smarter, more responsive AI agents inside Salesforce, but managing and validating those agents across each stage of the DevOps lifecycle still requires a reliable, test-driven process. With support for end-to-end AI agent lifecycle management, Agentforce Testing Center gives teams the tools they need to test thoroughly, iterate quickly, and deploy with confidence, all without touching the production org’s data. This structured approach to agent lifecycle management supports long-term scalability and reliability in your Agentforce agents.

What’s next? You can explore more about Agentforce or learn how to build and deploy agents reliably with Gearset.